Implement automatic visual inspection for defects with Tulip Vision and AWS Lookout for Vision

Visual inspection is key to maintaining production quality, but it is hard to implement while staying lean because dedicated human inspectors are expensive. Manual visual inspection also has high turnover: the work is very repetitive and visually demanding.

Automatic visual inspection with cameras and machine learning changes this picture. Machine learning algorithms for visual inspection have advanced to the point where, on many tasks, they can match or exceed human inspectors in both speed and accuracy.

With Tulip, you can implement automatic visual inspection by connecting the output of your Vision cameras to a best-in-class visual anomaly detection cloud service. Amazon Lookout for Vision is one such service: it offers a powerful algorithm behind a simple REST API endpoint that integrates easily with Tulip.

In this article we show you how to set things up quickly on AWS with AWS CloudFormation, and within Tulip with a prebuilt Library App. See the following video for a live tutorial on this process.

Prerequisites

Before beginning this process, please make sure that you have the following resources available:

- A USB camera connected to a Tulip Player PC (see the Tulip Vision hardware recommendation guide)

- A Tulip Vision Camera Configuration for the connected camera, and a verified working video stream (see the Getting Started with Vision guide)

- Data collected with normal/anomaly annotations in a Tulip Table (see the data collection app)

- An AWS account with a user permitted to create resources such as Lookout for Vision and API Gateway (see Amazon's getting started guide)

Currently, Lookout for Vision is only available in specific AWS regions.

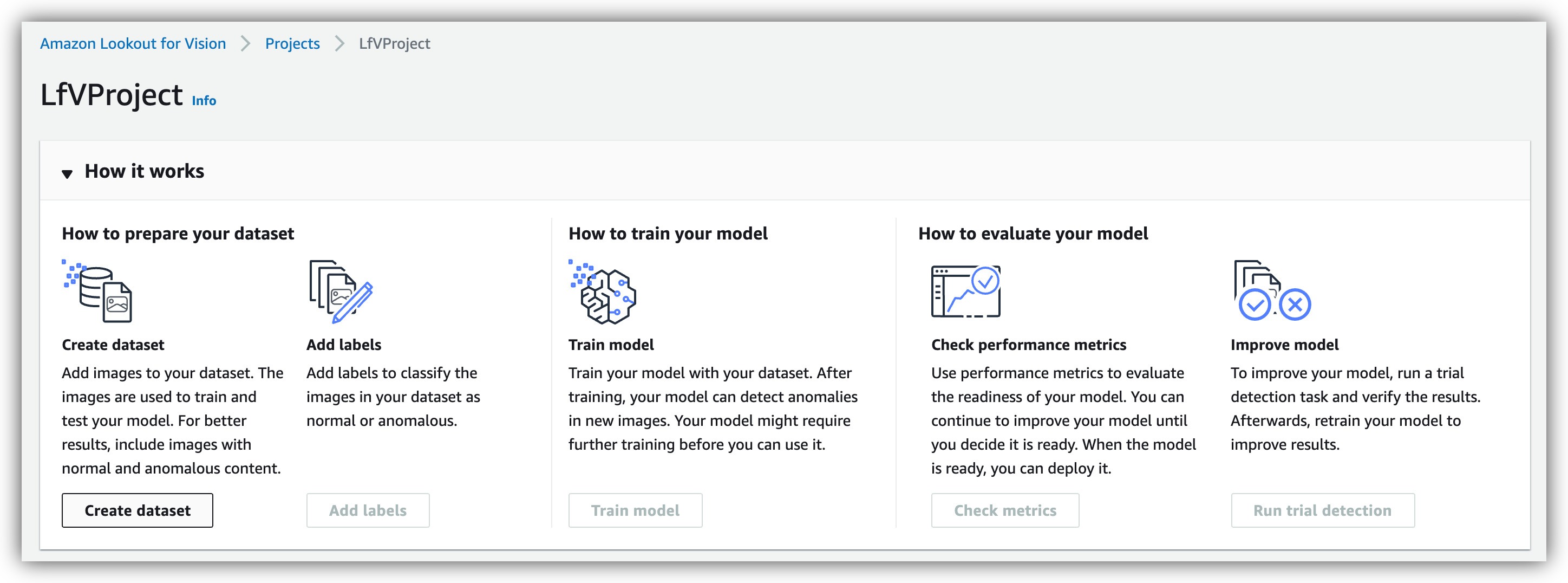

Set up Lookout for Vision in the AWS console

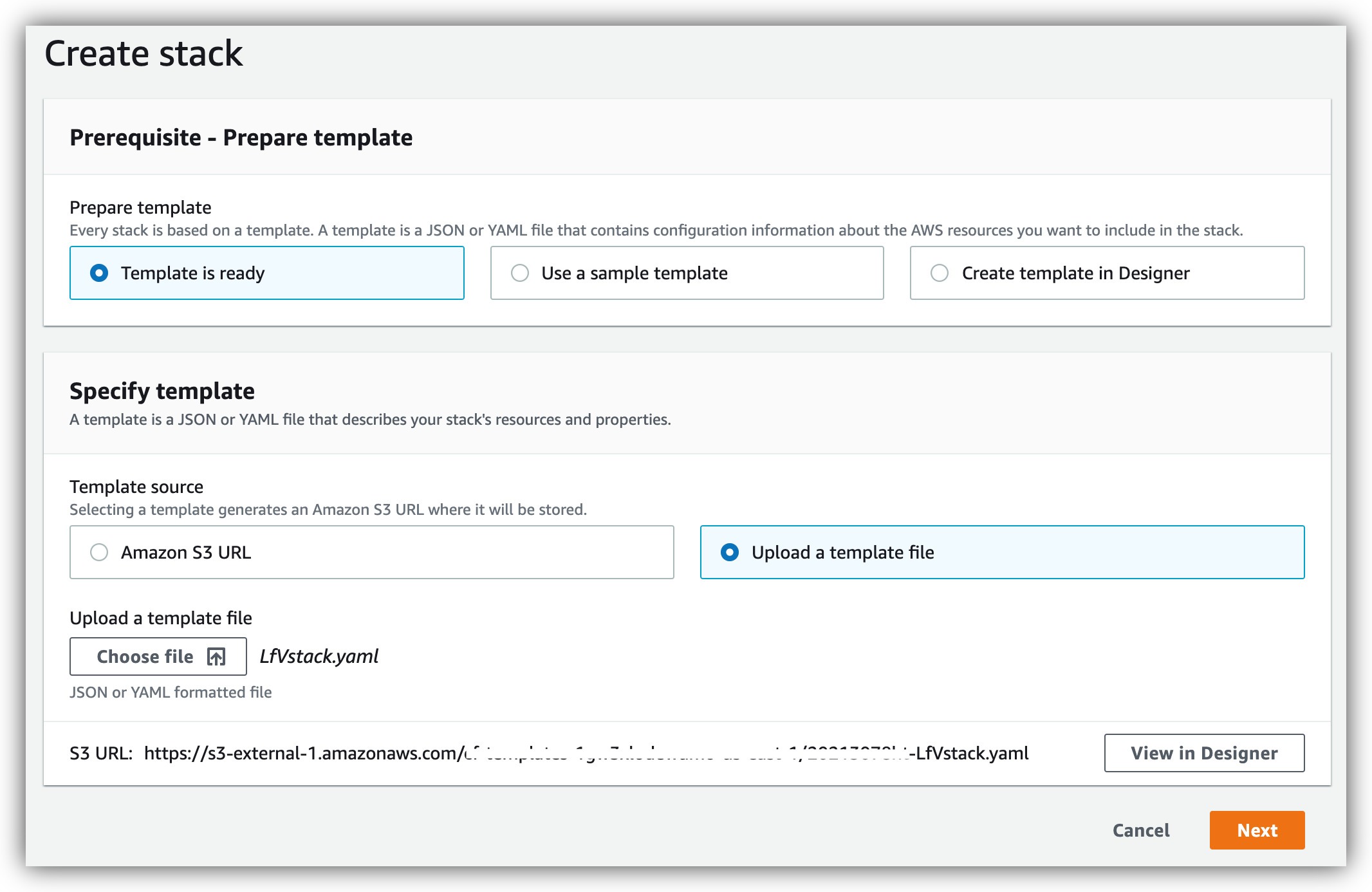

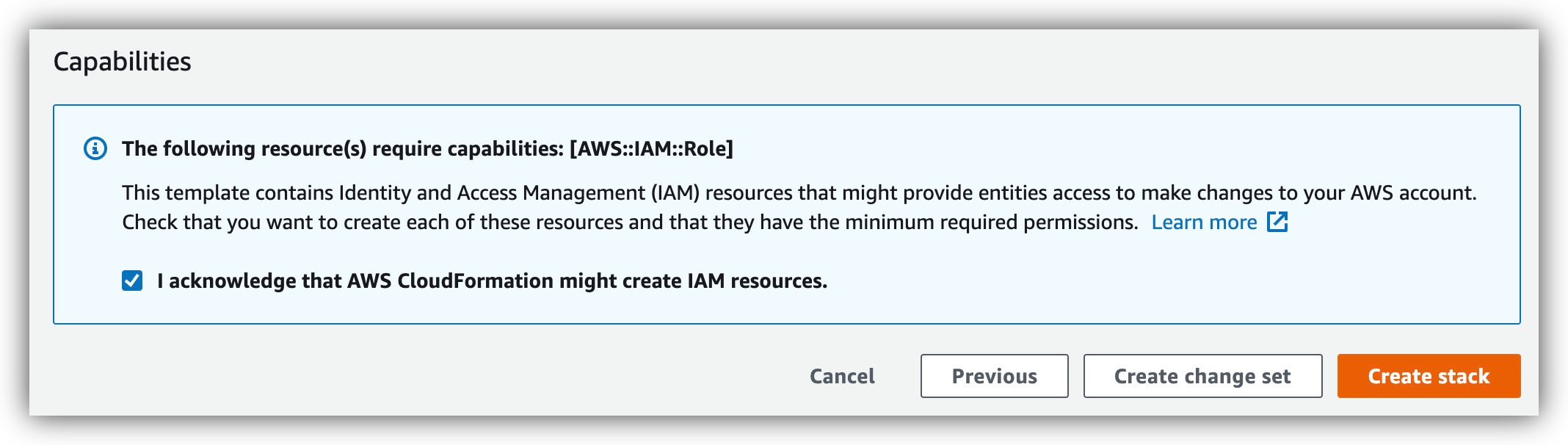

To help you set up the required cloud infrastructure in the AWS console, we provide an AWS CloudFormation Template (download). The CFT is a short script that provisions everything on AWS needed to train and run Lookout for Vision (LfV) detection models with Tulip. It creates an LfV project, several AWS Lambda functions that control the LfV model, and an Amazon API Gateway API for communicating with those functions. Tulip calls the API Gateway endpoints to send commands to the LfV model from within the Tulip Apps where the visual inspection is done.
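The template's actual Lambda code is not reproduced in this article. Purely to illustrate the architecture, here is a minimal sketch of how one Lambda function behind API Gateway could translate a few actions into Lookout for Vision calls. The project name, model version, action names (`start`, `stop`, `status`, `detect`) and the JSON request shape are hypothetical; `start_model`, `stop_model`, `describe_model`, and `detect_anomalies` are methods of the boto3 `lookoutvision` client. The client is passed in so the logic can be exercised without AWS.

```python
import base64
import json

# Hypothetical project name and model version: use the values your stack created.
PROJECT_NAME = "tulip-defect-detection"
MODEL_VERSION = "1"


def dispatch(action, lfv, image_bytes=None):
    """Translate one action name into the matching Lookout for Vision call."""
    ids = {"ProjectName": PROJECT_NAME, "ModelVersion": MODEL_VERSION}
    if action == "start":
        # Host the model on one inference unit; hosting is what incurs cost.
        return {"status": lfv.start_model(MinInferenceUnits=1, **ids)["Status"]}
    if action == "stop":
        return {"status": lfv.stop_model(**ids)["Status"]}
    if action == "status":
        return {"status": lfv.describe_model(**ids)["ModelDescription"]["Status"]}
    if action == "detect":
        if image_bytes is None:
            raise ValueError("detect requires an image")
        resp = lfv.detect_anomalies(Body=image_bytes, ContentType="image/jpeg", **ids)
        result = resp["DetectAnomalyResult"]
        return {"isAnomalous": result["IsAnomalous"], "confidence": result["Confidence"]}
    raise ValueError(f"unknown action: {action!r}")


def make_handler(lfv):
    """Return a Lambda handler bound to a Lookout for Vision client."""
    def handler(event, context):
        # Assumed request shape: JSON body {"action": "...", "image": "<base64 JPEG>"}.
        payload = json.loads(event.get("body") or "{}")
        image = payload.get("image")
        image_bytes = base64.b64decode(image) if image else None
        try:
            result = dispatch(payload.get("action"), lfv, image_bytes)
        except ValueError as err:
            return {"statusCode": 400, "body": json.dumps({"error": str(err)})}
        return {"statusCode": 200, "body": json.dumps(result)}
    return handler
```

In a deployment you might create the client once at module level, for example `handler = make_handler(boto3.client("lookoutvision"))`, and point the Lambda configuration at `handler`.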

- Download the CFT.

- Open CloudFormation in the AWS console.

- Create a stack and upload the CFT file.

- Run the CloudFormation stack creation script.

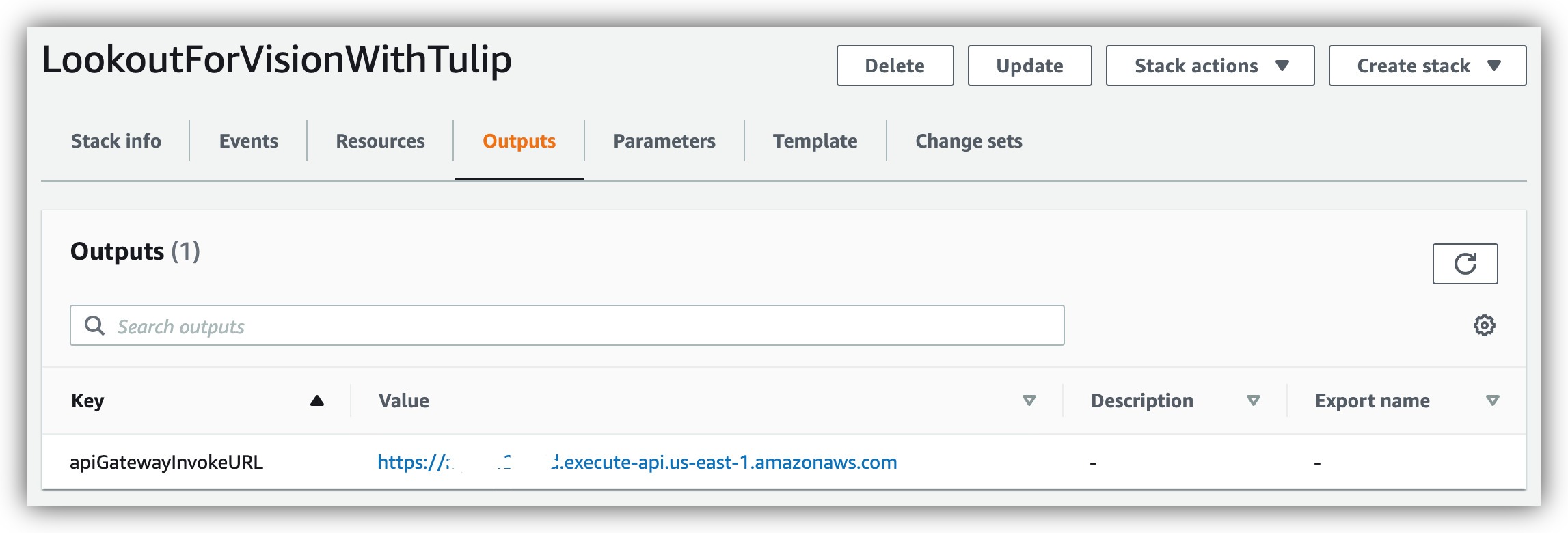

- Note the outputs.

- Copy the apiGatewayInvokeURL value; you will need it shortly to set up the Connector Functions.

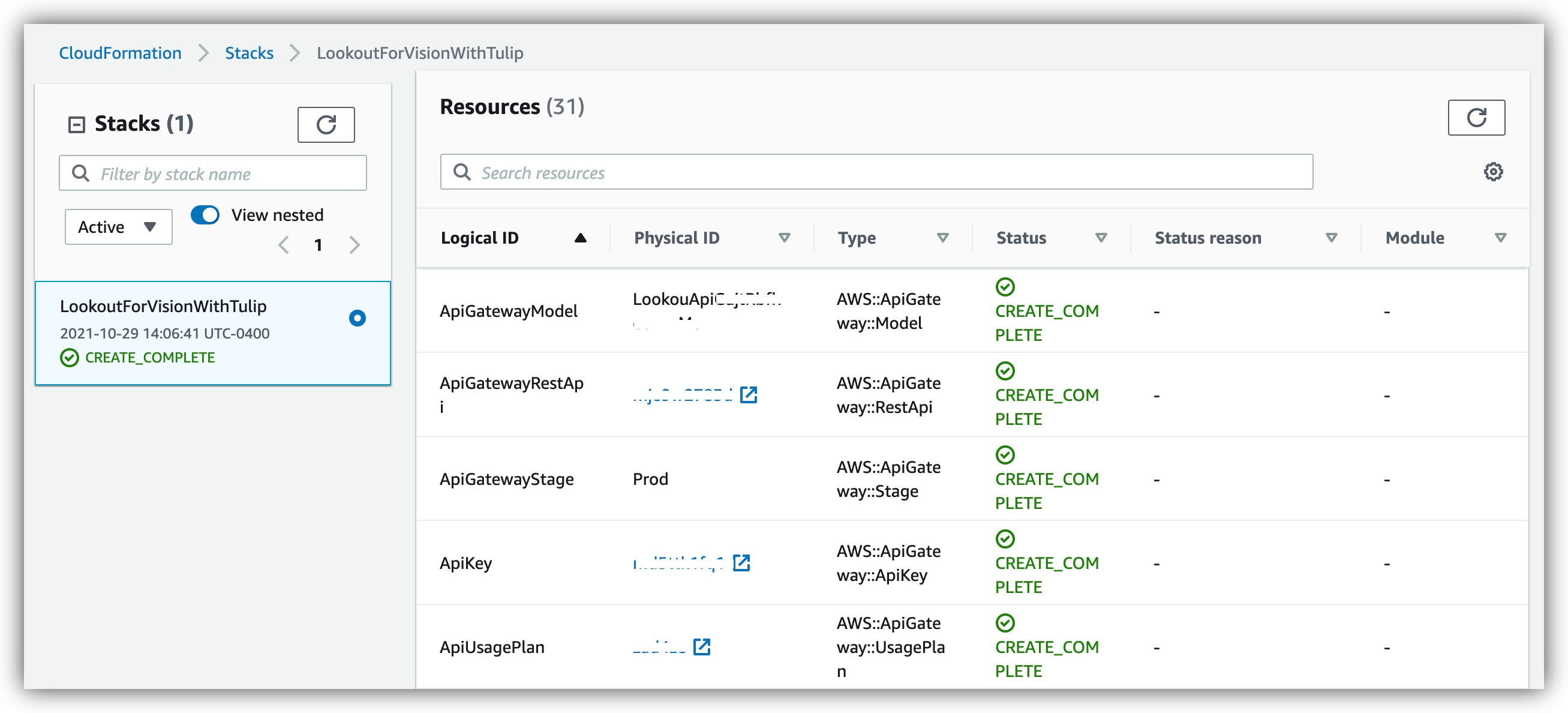

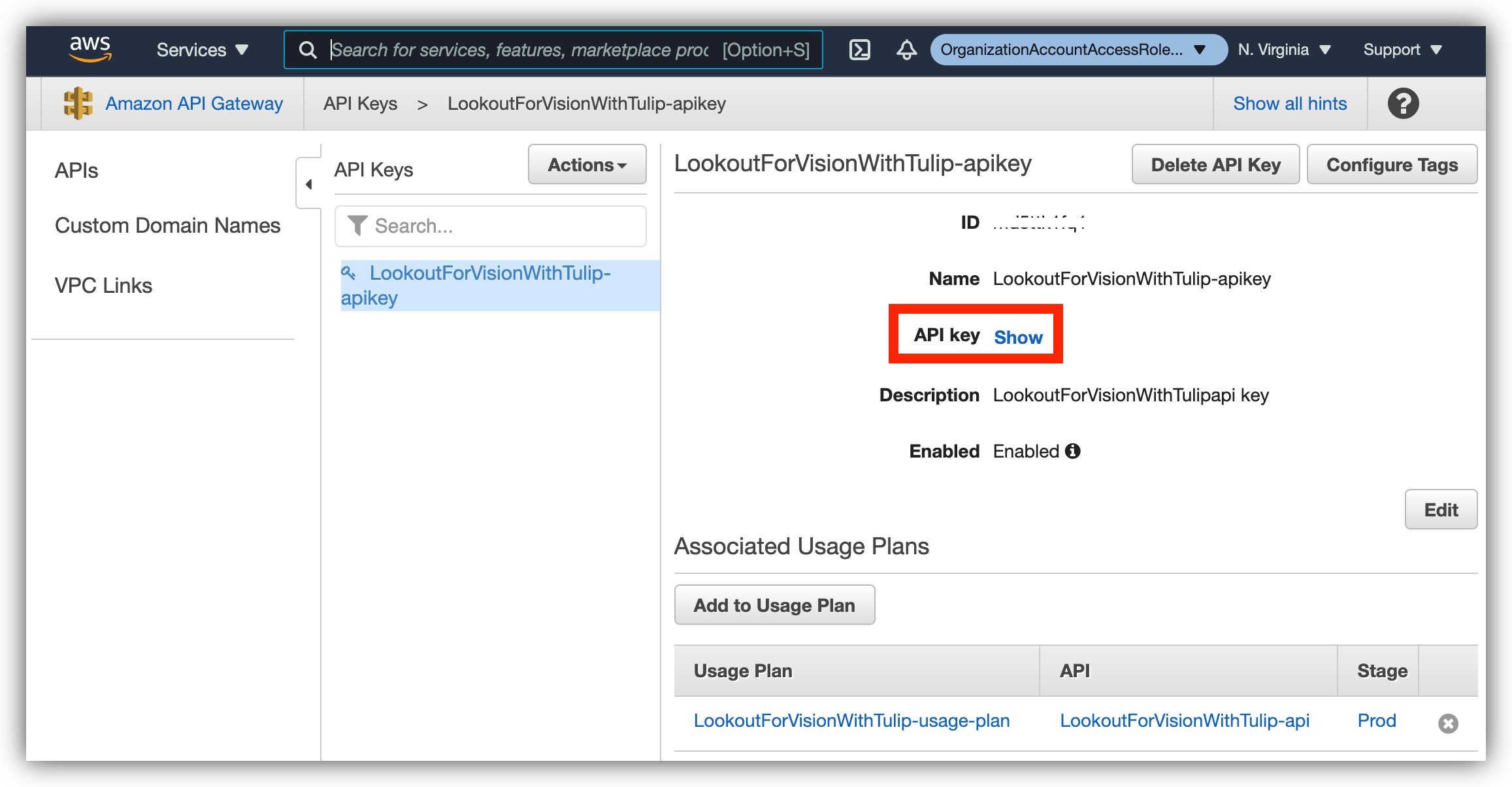

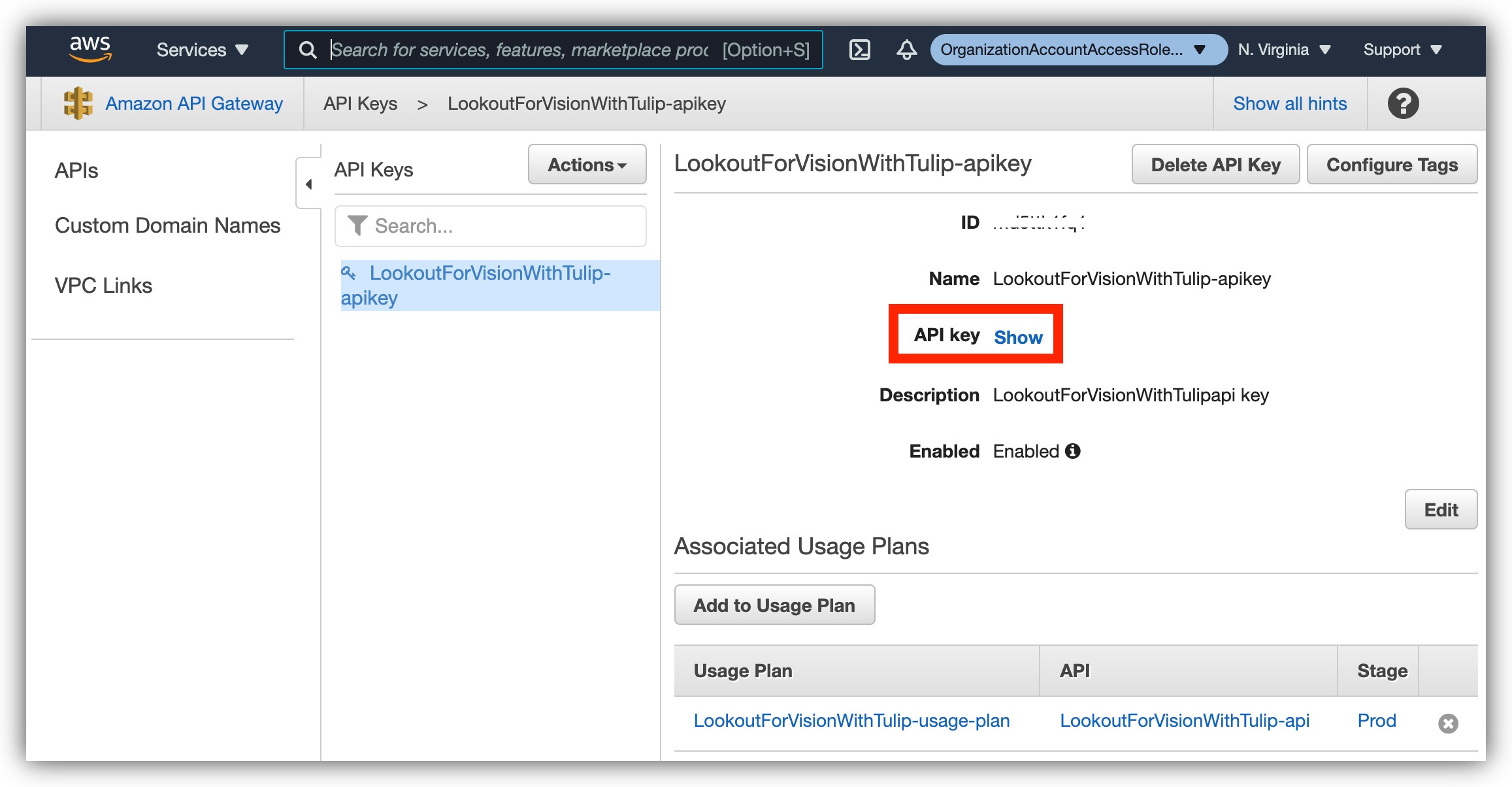
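If you prefer to script this step, the stack outputs are also available through the CloudFormation `DescribeStacks` API. A minimal sketch, assuming a boto3 CloudFormation client (`boto3.client("cloudformation")`) and whatever stack name you chose; only the `apiGatewayInvokeURL` output key comes from the template.

```python
def stack_outputs(describe_stacks_response):
    """Flatten a CloudFormation DescribeStacks response into {OutputKey: OutputValue}."""
    stack = describe_stacks_response["Stacks"][0]
    return {o["OutputKey"]: o["OutputValue"] for o in stack.get("Outputs", [])}


def get_invoke_url(cfn, stack_name):
    """Read the apiGatewayInvokeURL output of the stack through a CloudFormation client."""
    outputs = stack_outputs(cfn.describe_stacks(StackName=stack_name))
    return outputs["apiGatewayInvokeURL"]
```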

Also copy the API key from the Resources tab: click the key resource to find its value.

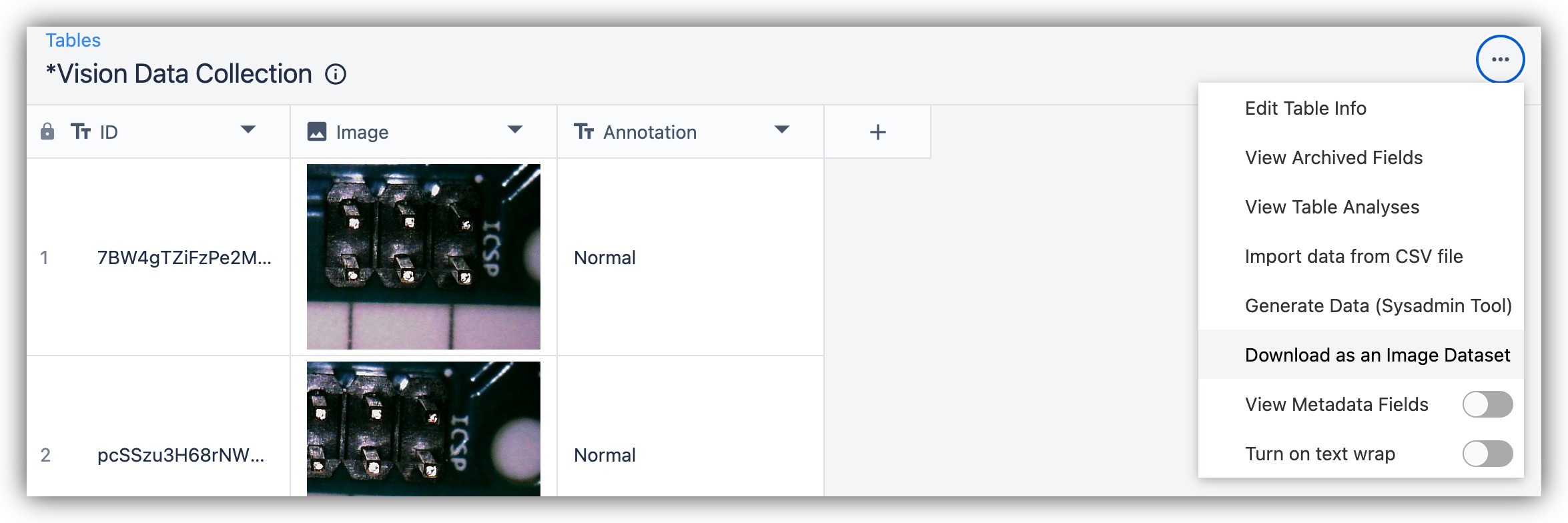

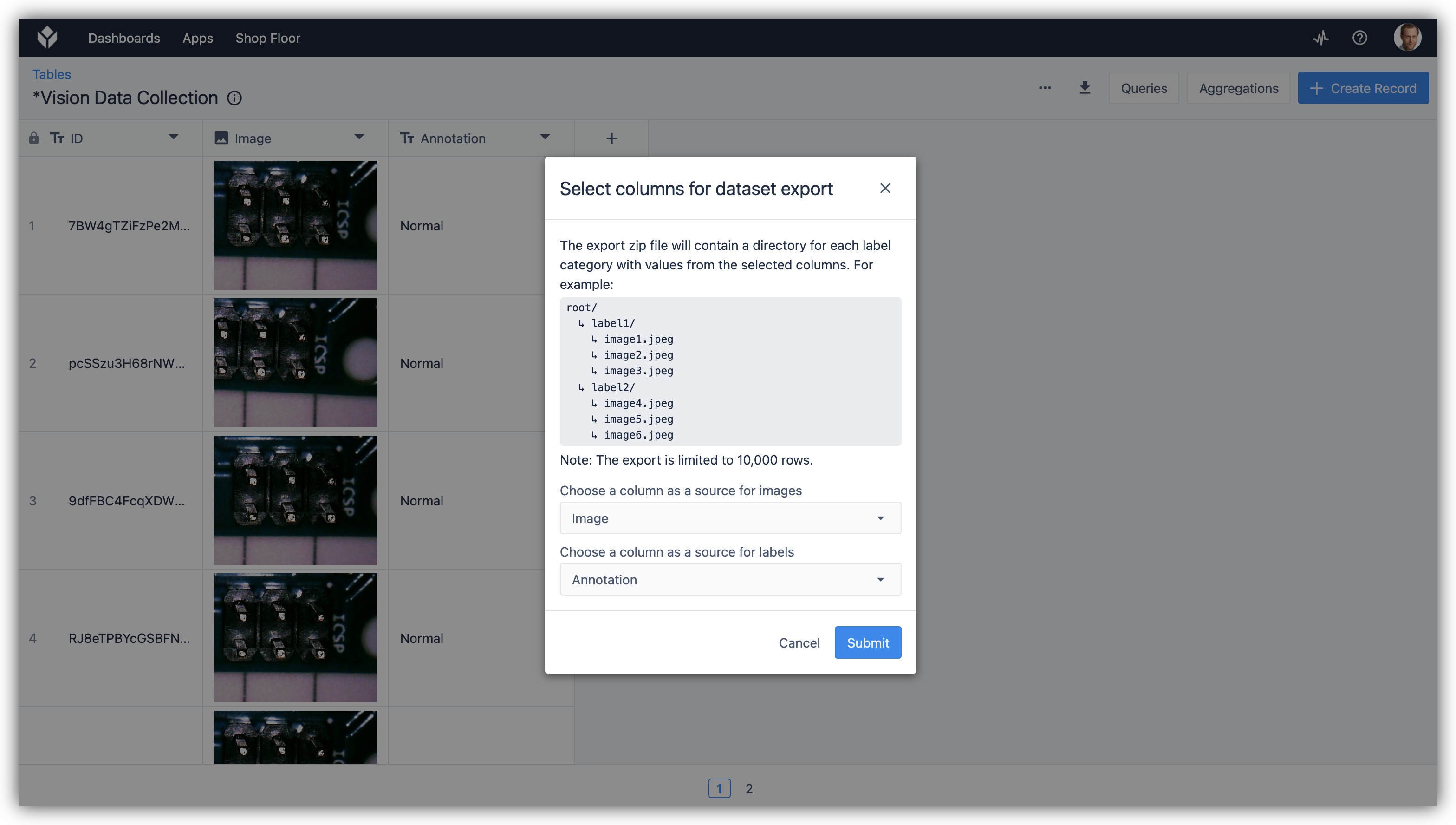

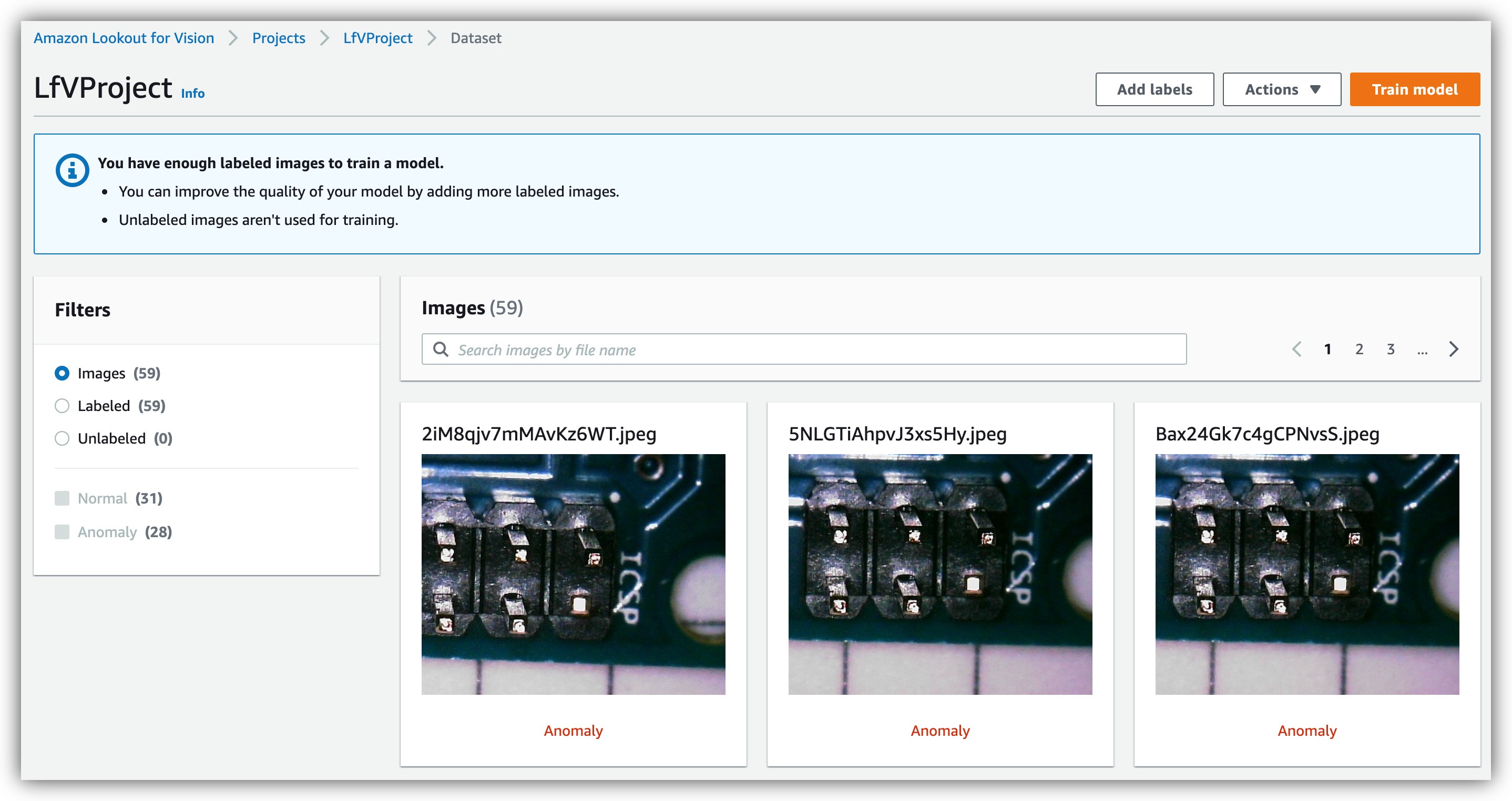
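The API key value can be read programmatically as well. A minimal sketch, assuming the stack contains a single `AWS::ApiGateway::ApiKey` resource: it finds the key's physical ID with CloudFormation `DescribeStackResources`, then asks API Gateway for the value with `get_api_key(..., includeValue=True)`. Both clients are injected, so in practice you would pass `boto3.client("cloudformation")` and `boto3.client("apigateway")`. Treat the returned value as a secret.

```python
def find_api_key_id(stack_resources_response):
    """Return the physical ID of the first API Gateway API key in the stack."""
    for resource in stack_resources_response["StackResources"]:
        if resource["ResourceType"] == "AWS::ApiGateway::ApiKey":
            return resource["PhysicalResourceId"]
    raise LookupError("stack has no AWS::ApiGateway::ApiKey resource")


def get_api_key_value(cfn, apigw, stack_name):
    """Look up the key's ID in the stack, then ask API Gateway for its value."""
    key_id = find_api_key_id(cfn.describe_stack_resources(StackName=stack_name))
    return apigw.get_api_key(apiKey=key_id, includeValue=True)["value"]
```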

Now that CloudFormation has provisioned the AWS resources, we can train a visual machine learning model with Lookout for Vision. This requires an annotated dataset of images. You could create the dataset manually, but our Data Collection app [link] offers an easy way to collect data with Tulip. The data is stored in a Tulip Table and is easy to export. The next few steps assume that you have collected a dataset in Tulip.
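Lookout for Vision can label images automatically from folder names: images in a folder named `normal` are labeled normal, and images in a folder named `anomaly` are labeled anomalous. A minimal sketch of turning a CSV export of the Tulip Table into a download plan with that layout follows. The column names (`image_url`, `label`) and the label values are hypothetical; match them to your own table's export. Downloading the files themselves (for example with `urllib.request.urlretrieve`) is left out.

```python
import csv
import io
import posixpath
from urllib.parse import urlparse

# Hypothetical export layout: adjust to your own Tulip Table.
IMAGE_COLUMN = "image_url"
LABEL_COLUMN = "label"
# Map the table's label values onto the folder names Lookout for Vision expects.
LABEL_TO_FOLDER = {"normal": "normal", "anomaly": "anomaly", "defect": "anomaly"}


def plan_dataset(csv_text):
    """Return (image_url, relative_path) pairs sorted into normal/ and anomaly/."""
    plan = []
    for index, row in enumerate(csv.DictReader(io.StringIO(csv_text))):
        label = (row.get(LABEL_COLUMN) or "").strip().lower()
        folder = LABEL_TO_FOLDER.get(label)
        if folder is None:
            continue  # skip unlabeled or unrecognized rows instead of guessing
        url = row[IMAGE_COLUMN]
        # Keep the original file extension when the URL has one.
        extension = posixpath.splitext(urlparse(url).path)[1] or ".jpg"
        plan.append((url, f"{folder}/{index:05d}{extension}"))
    return plan
```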

- Export the dataset from the Tulip Table.

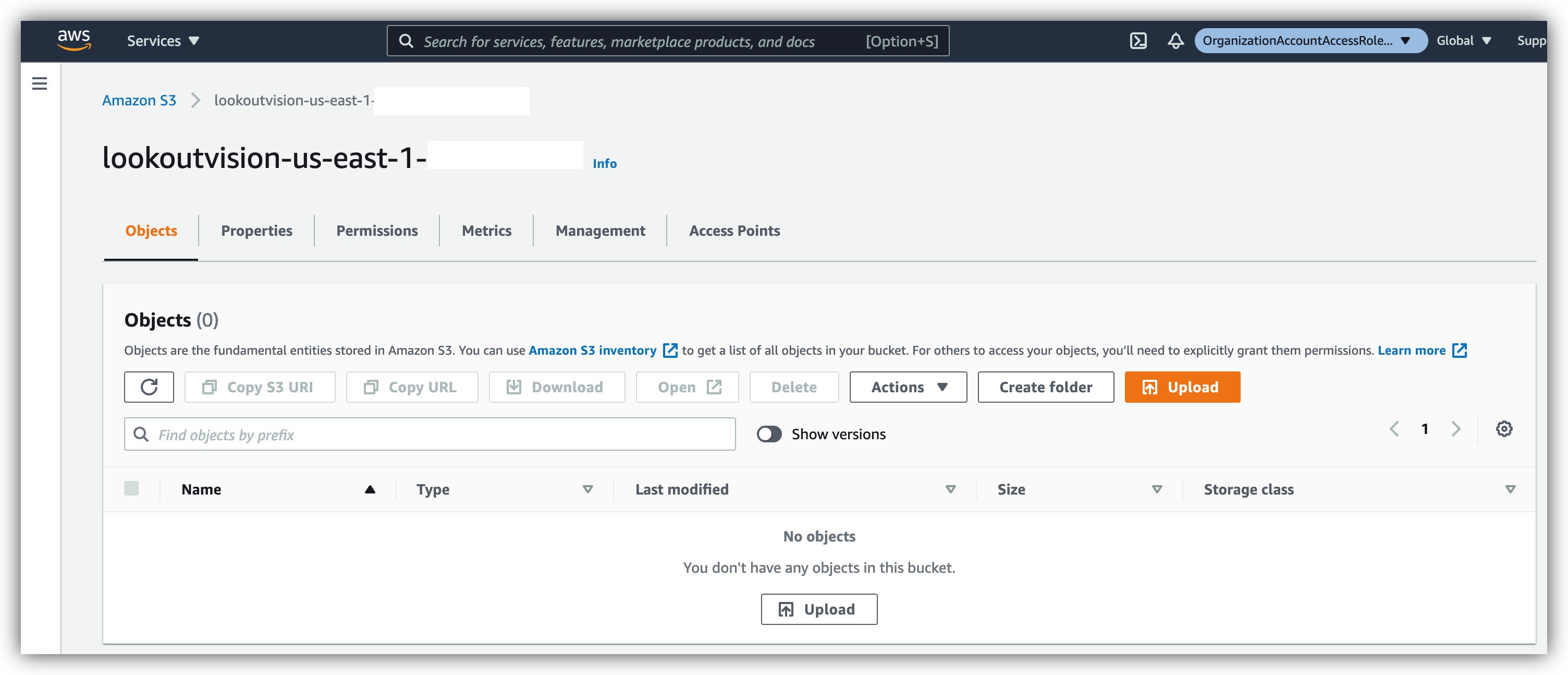

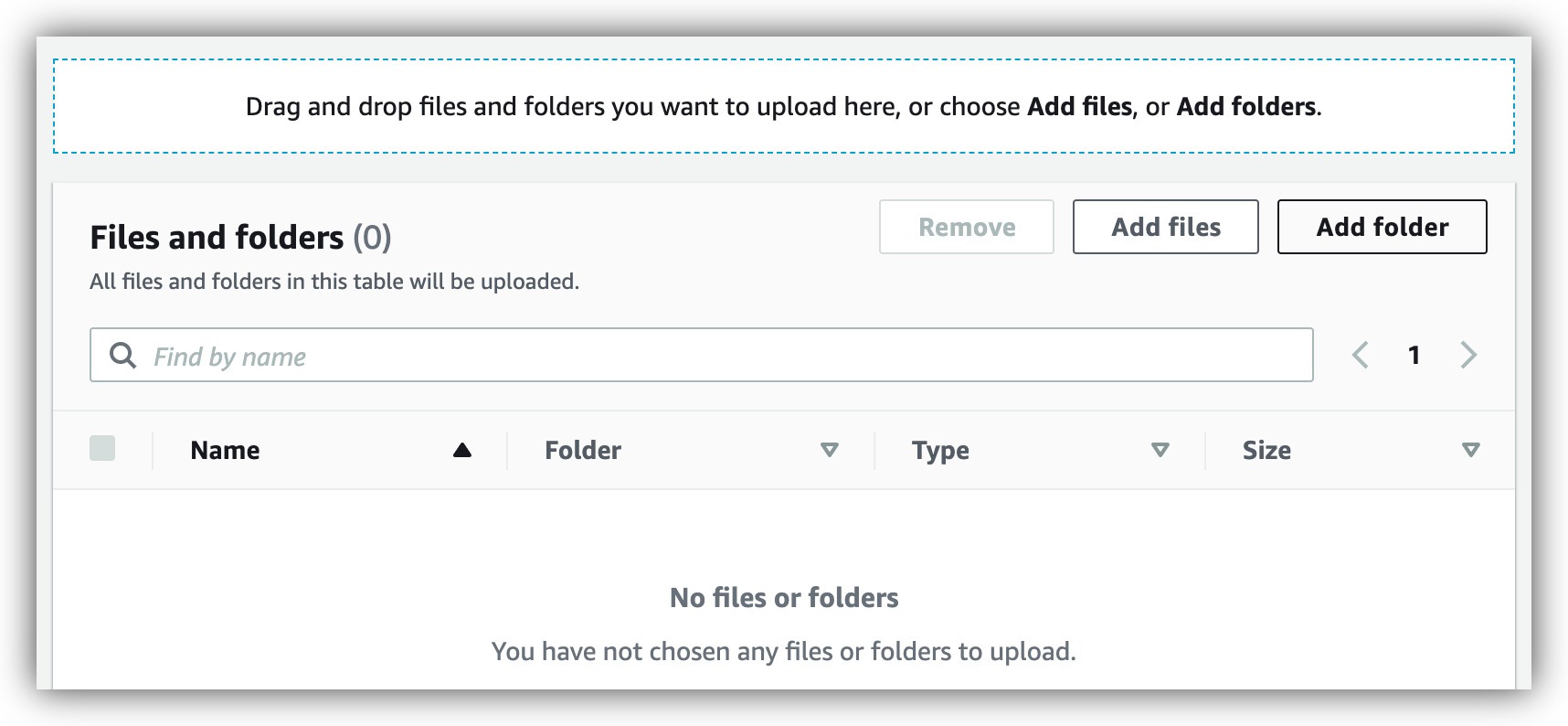

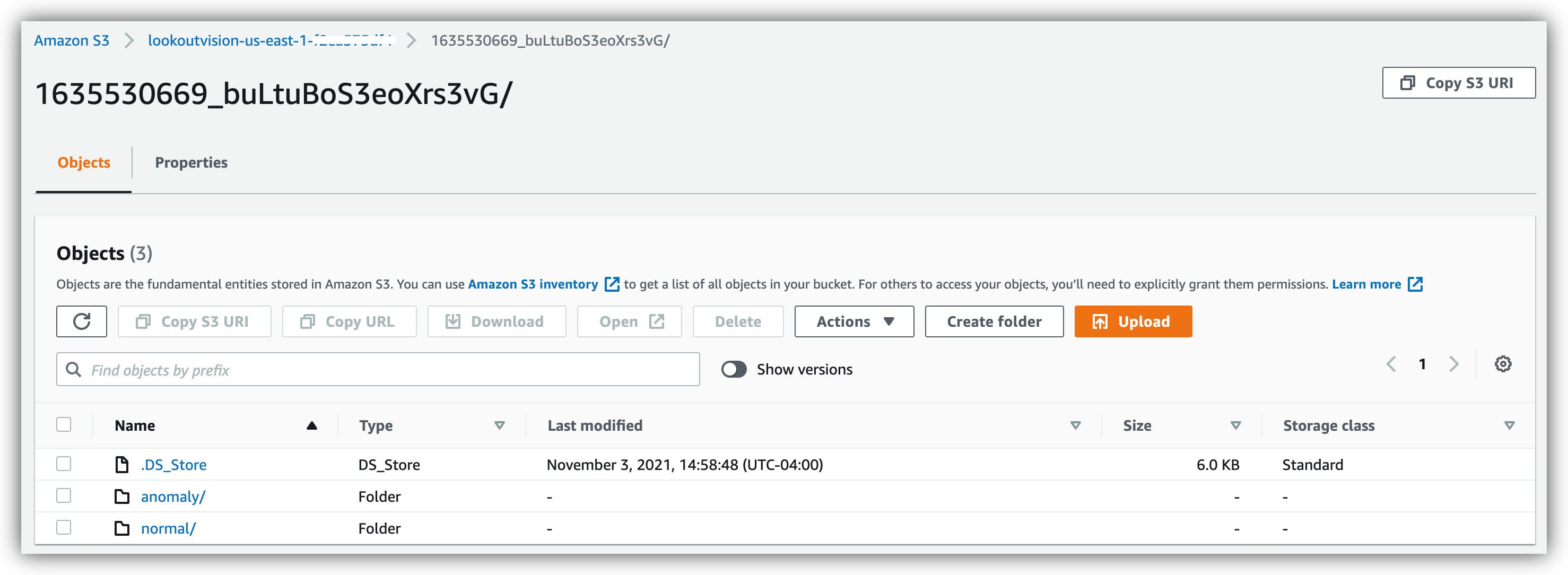

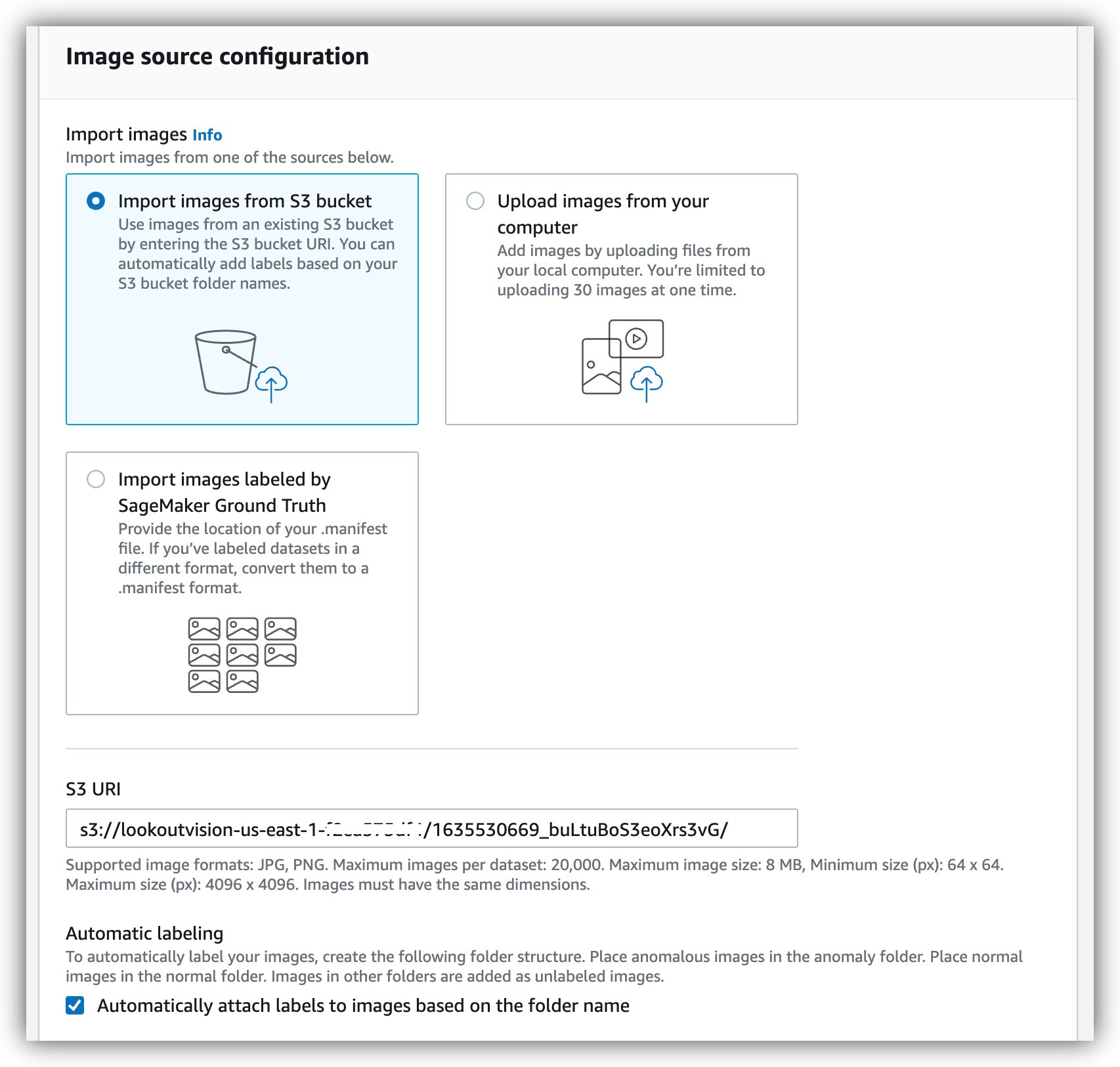

- Upload the dataset to the S3 bucket created by Lookout for Vision.

- Create a dataset on Lookout for Vision and select the S3 bucket as a source.

Make sure to select Automatically attach labels:

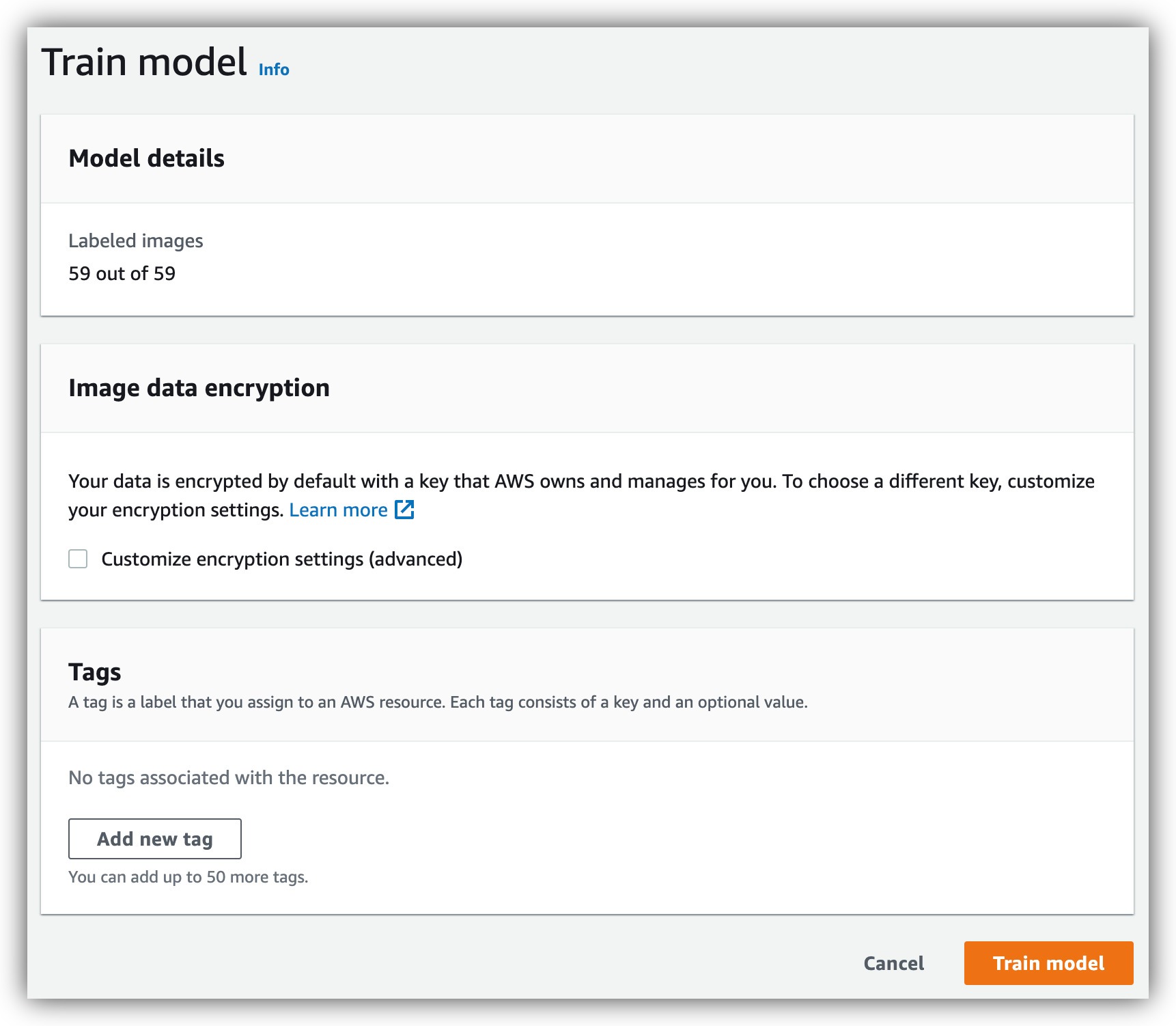
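The upload can also be scripted. A minimal sketch, assuming the images are already on disk in `normal/` and `anomaly/` folders: it keeps those folder names in the S3 keys, so the automatic labeling option still applies, and uploads with the boto3 S3 client's `upload_file(Filename, Bucket, Key)`. The bucket name and key prefix are your own choice.

```python
import os

LABEL_FOLDERS = ("normal", "anomaly")


def s3_keys_for(local_root, prefix):
    """Pair each file under local_root/normal and local_root/anomaly with an S3 key."""
    prefix = prefix.strip("/")
    pairs = []
    for folder in LABEL_FOLDERS:
        folder_path = os.path.join(local_root, folder)
        if not os.path.isdir(folder_path):
            continue
        for name in sorted(os.listdir(folder_path)):
            path = os.path.join(folder_path, name)
            if not os.path.isfile(path):
                continue
            # The folder name stays in the key so automatic labeling still works.
            key = f"{prefix}/{folder}/{name}" if prefix else f"{folder}/{name}"
            pairs.append((path, key))
    return pairs


def upload_dataset(s3, bucket, local_root, prefix=""):
    """Upload every pair with a boto3 S3 client; returns the number of files sent."""
    pairs = s3_keys_for(local_root, prefix)
    for path, key in pairs:
        s3.upload_file(path, bucket, key)
    return len(pairs)
```

When creating the dataset in the console, you would then point it at the prefix that contains the `normal` and `anomaly` folders.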

Now that the dataset is ready in Lookout for Vision, we can train a model. Training is straightforward in the AWS console and takes roughly 45 minutes.

- Click Train Model.

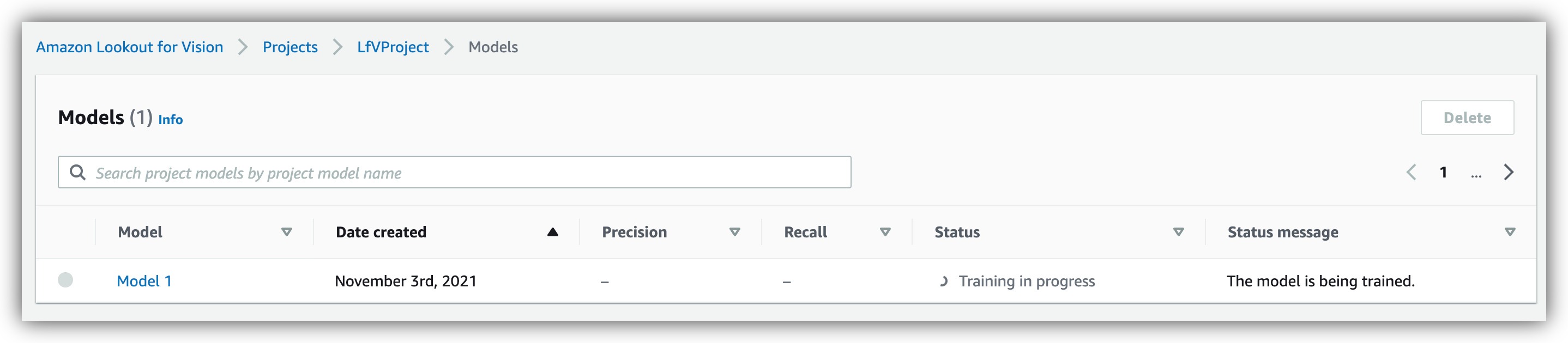

- Check the training status.

- Review training results.
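Training status and results can also be checked outside the console. A minimal sketch, assuming a boto3 `lookoutvision` client and your own project name and model version: it polls `describe_model` until the status is `TRAINED` or `TRAINING_FAILED`, then reads the F1 score, precision, and recall that Lookout for Vision reports in the model description. The polling interval and limit are arbitrary choices.

```python
import time


def wait_for_training(lfv, project, version, poll_seconds=60, max_polls=180, sleep=time.sleep):
    """Poll DescribeModel until training finishes; returns the ModelDescription dict."""
    for _ in range(max_polls):
        desc = lfv.describe_model(ProjectName=project, ModelVersion=version)["ModelDescription"]
        if desc["Status"] in ("TRAINED", "TRAINING_FAILED"):
            return desc
        sleep(poll_seconds)
    raise TimeoutError("training did not finish within the polling window")


def training_metrics(desc):
    """Extract the evaluation metrics reported for a trained model."""
    if desc["Status"] != "TRAINED":
        raise RuntimeError(f"training ended with {desc['Status']}: {desc.get('StatusMessage', '')}")
    perf = desc.get("Performance", {})
    return {name: perf.get(name) for name in ("F1Score", "Precision", "Recall")}
```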

Once the model has finished training, there is nothing more to do on the AWS side. CloudFormation has already made all the connections, so we are clear to connect Tulip to Lookout for Vision.

Set up Tulip to call Lookout for Vision models

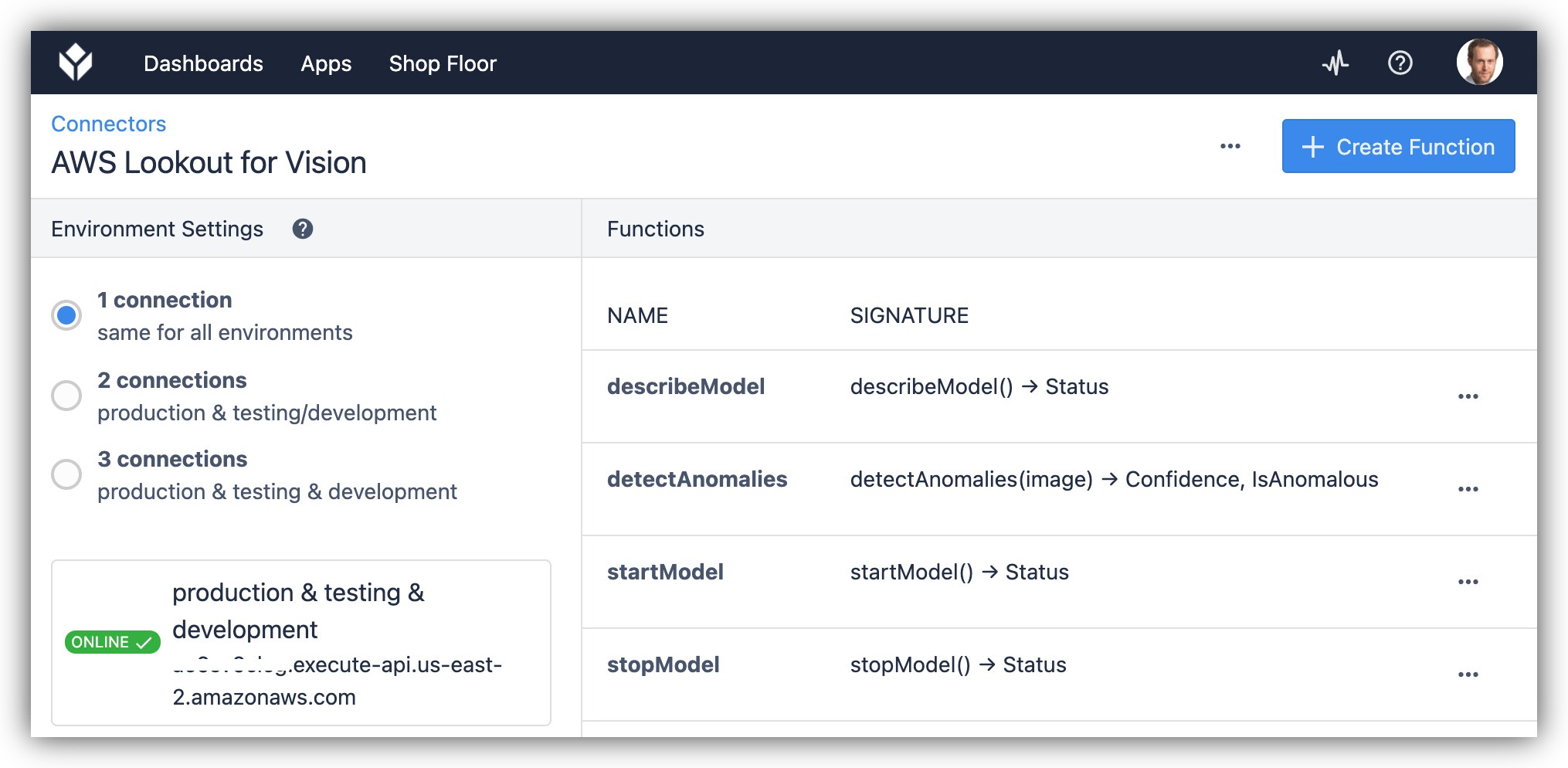

Tulip integrates easily with external services through APIs, which makes connecting it to AWS Lookout for Vision straightforward. We will use the REST API endpoint from the previous section to control the LfV model through Tulip Connector Functions. Instead of creating the functions from scratch, you can use the prebuilt ones in our "Defect Detection with Lookout for Vision" Library App. This saves a lot of time and ensures the connectors are built correctly. First, though, you need to populate the connector functions with the values from your own AWS account.

- Find the Connector Functions in Tulip.

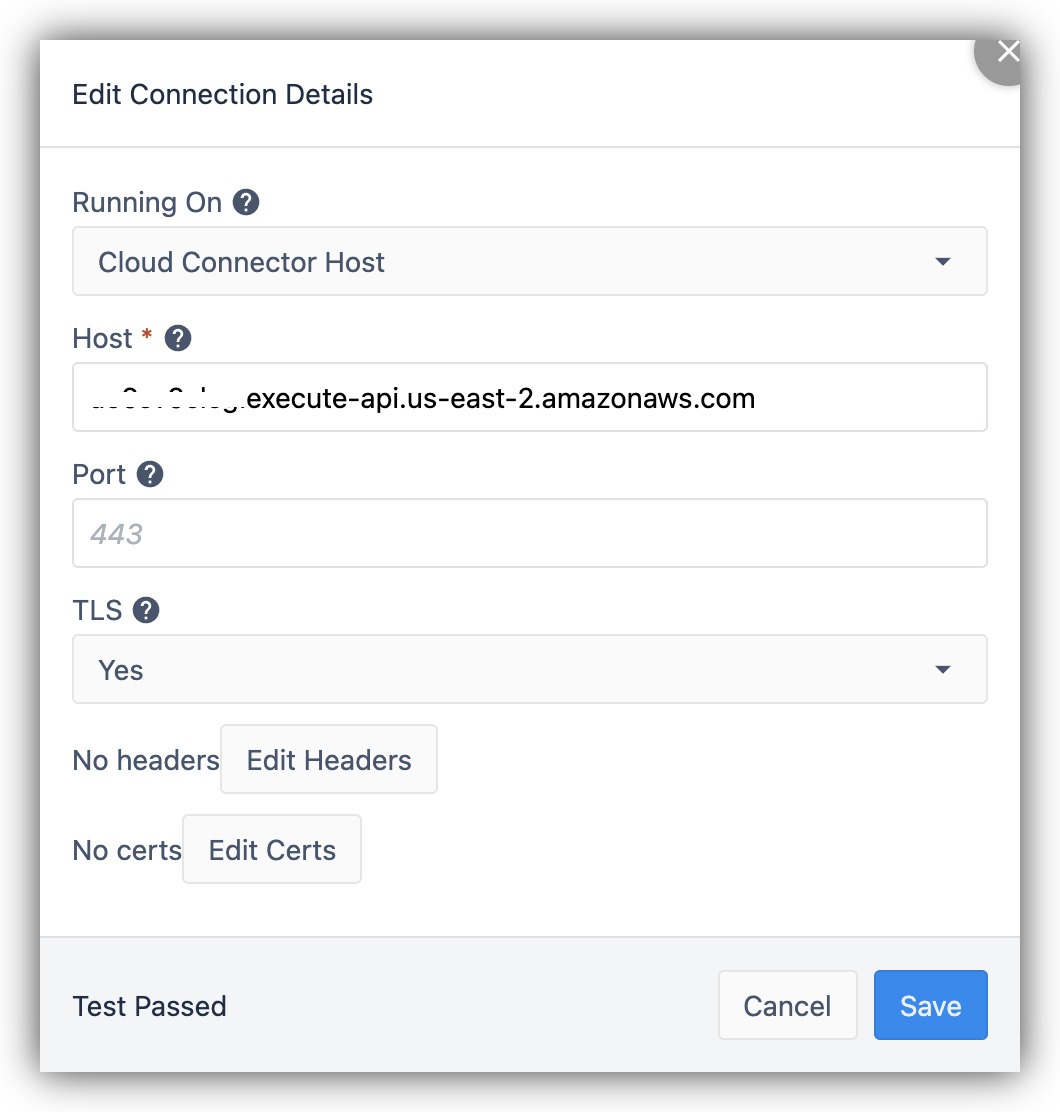

- In the connector, set the endpoint URL to the apiGatewayInvokeURL value you copied earlier from CloudFormation.

- In each function, set the API key from AWS.

Make sure you do this for all four functions.
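Under the hood, each connector function is an HTTP request to the API Gateway endpoint with the API key attached; API Gateway expects API keys in the `x-api-key` header. The sketch below builds, but does not send, such a request with Python's standard library. The route name, the POST method, and the JSON body are assumptions for illustration: the actual routes are defined by the CloudFormation template and the Library App's connector functions.

```python
import json
import urllib.request


def build_request(invoke_url, api_key, route, payload=None):
    """Build, without sending, a POST request like the one a connector function makes."""
    url = invoke_url.rstrip("/") + "/" + route.lstrip("/")
    body = json.dumps(payload or {}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "x-api-key": api_key,  # API Gateway reads API keys from this header
            "Content-Type": "application/json",
        },
    )
```

For example, `req = build_request(invoke_url, api_key, "status")` targets a hypothetical `status` route, and `urllib.request.urlopen(req)` would send it.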

Use Lookout for Vision in Tulip apps

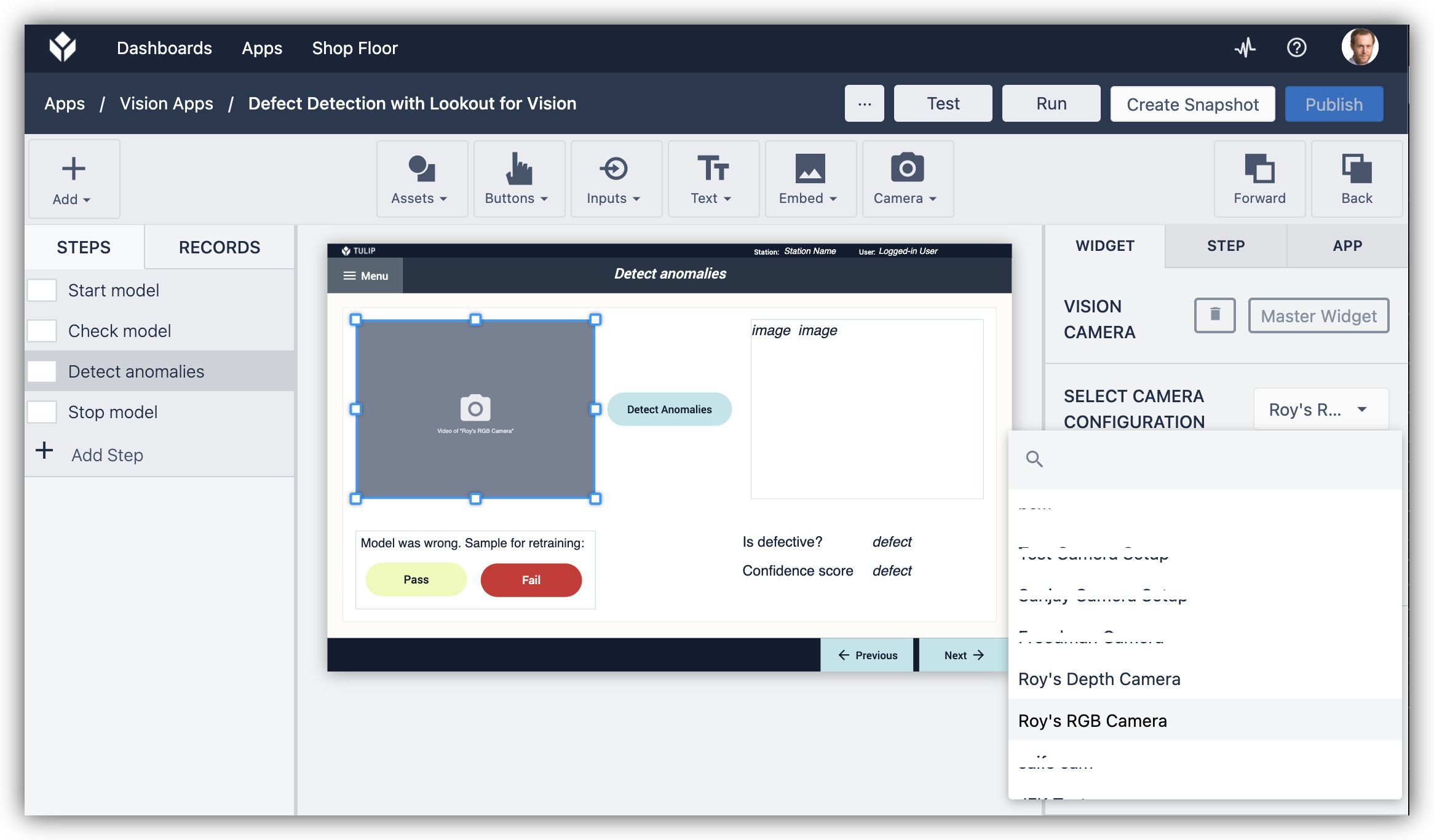

The Defect Detection app from the Library sets up a very basic visual inspection process. You can modify the app, or copy parts of it into other apps, to suit your needs. To use it, you need to make a few small changes for your specific Tulip Vision setup: point both the capture trigger and the camera preview widget (which gives visual feedback) at your Vision Camera Configuration.

- Set up the camera widget in the Tulip app with the correct camera configuration.

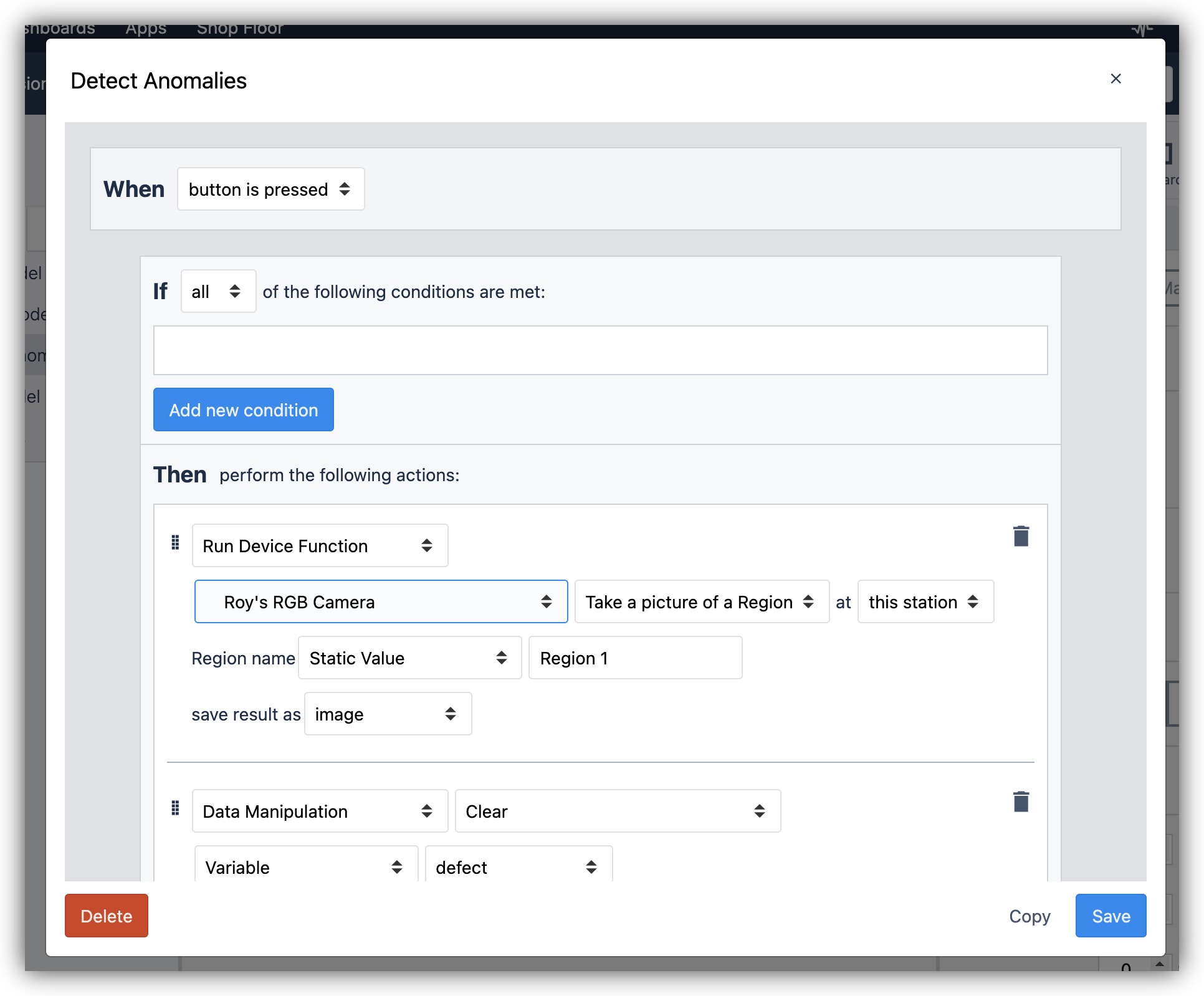

- Set up the "Detect Anomalies" Trigger with the correct camera configuration.

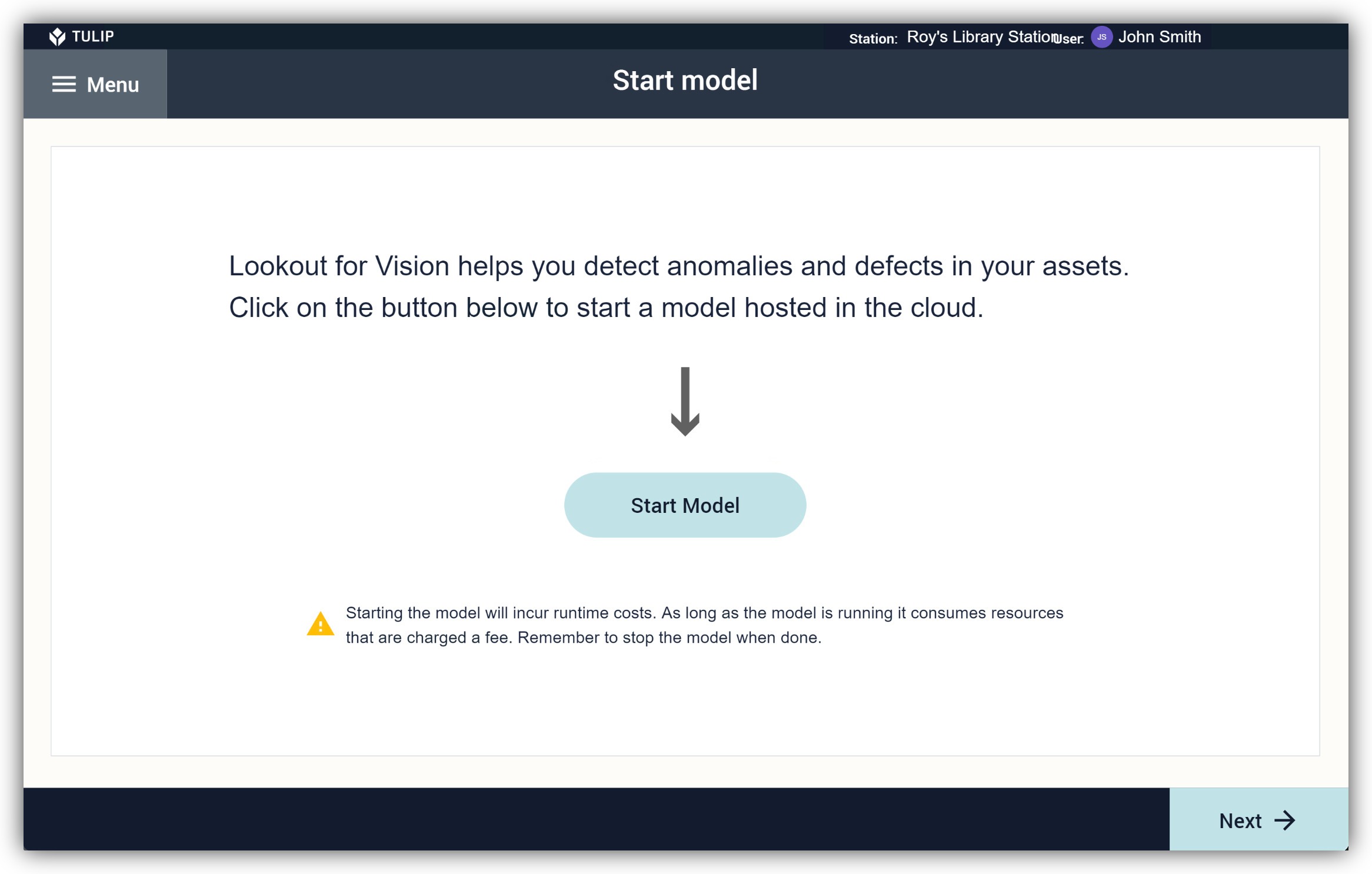

Everything is now set for running your model in Tulip. Before the model can be used for inference (evaluating an image), it must be "Hosted": it occupies cloud computing resources, such as a cloud virtual machine, to serve the model to your app. The provided Tulip app includes a button that does this.

While the model is "Hosted", it consumes resources that have a cost attached to them. Remember to turn off your models when they are not in use, or you risk spending money for no return. You can learn about AWS LfV pricing here. Keep in mind that AWS also offers a Free Tier for LfV. Tulip is not responsible for resources hosted privately on AWS, but you are welcome to contact us with questions.
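Because cost accrues for as long as the model is hosted, one option when scripting is to tie hosting to a scope that always ends with a stop. A minimal sketch, assuming a boto3 `lookoutvision` client and your own project name and model version: a context manager that calls `start_model`, waits for `describe_model` to report `HOSTED`, and calls `stop_model` on exit even if something fails. The polling values are arbitrary.

```python
import time
from contextlib import contextmanager


@contextmanager
def hosted_model(lfv, project, version, poll_seconds=15, max_polls=120, sleep=time.sleep):
    """Host a model for the duration of a with-block and always try to stop it afterwards."""
    ids = {"ProjectName": project, "ModelVersion": version}
    lfv.start_model(MinInferenceUnits=1, **ids)  # hosting, and its cost, starts here
    try:
        for _ in range(max_polls):
            status = lfv.describe_model(**ids)["ModelDescription"]["Status"]
            if status == "HOSTED":
                break
            if status == "HOSTING_FAILED":
                raise RuntimeError("model failed to host")
            sleep(poll_seconds)
        else:
            raise TimeoutError("model did not reach HOSTED in time")
        yield
    finally:
        # Stop hosting even if waiting or inference raised, so the model is not left running.
        lfv.stop_model(**ids)
```

Inference calls then go inside the block, for example `with hosted_model(client, "my-project", "1"): ...`.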

Go ahead and run the Defect Detection app on the Player PC with the connected inspection camera. The following steps are done on the running app:

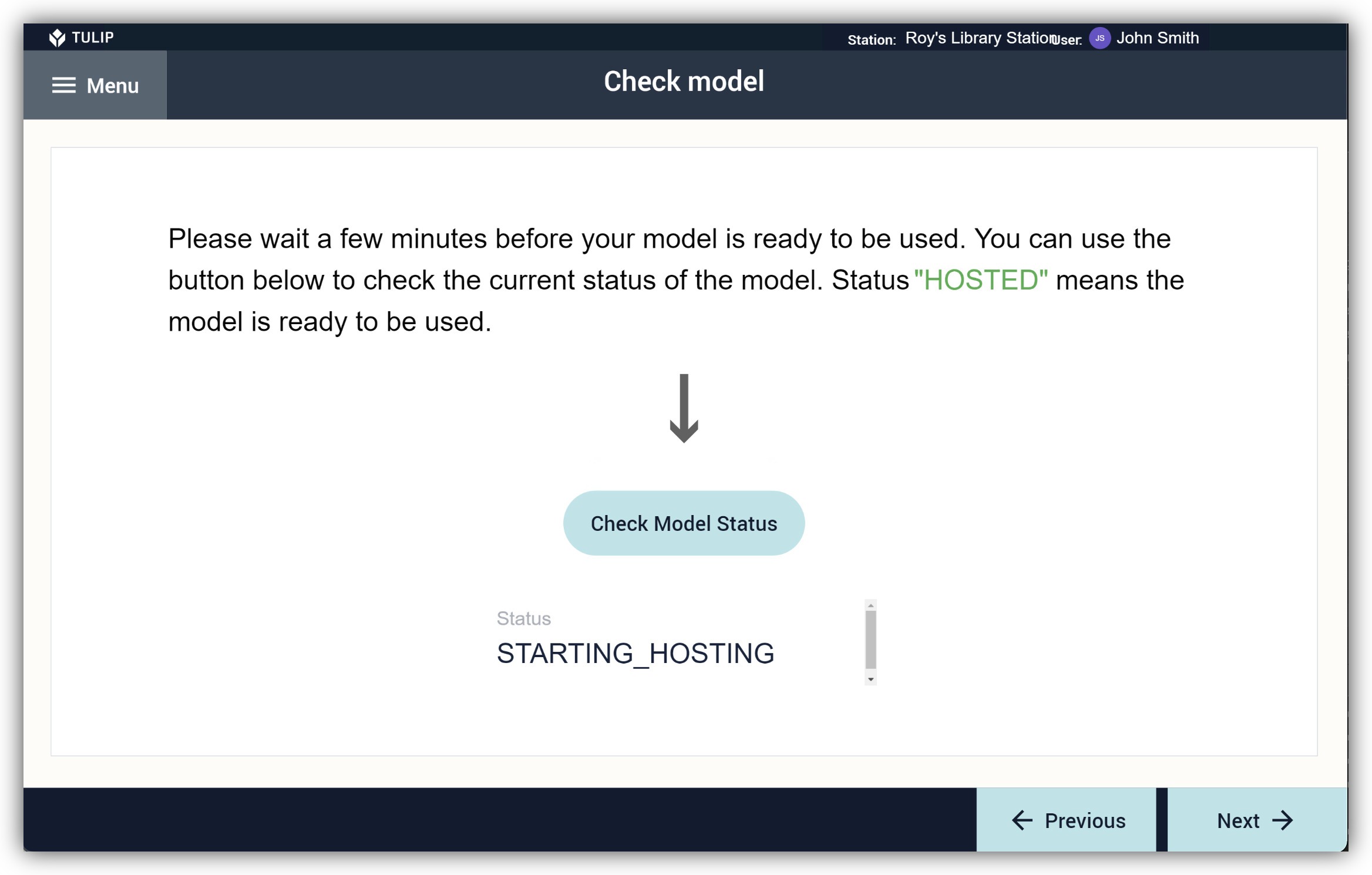

- Turning the model ON ("Hosting").

Once you start the model it will begin incurring costs. Remember to turn it off.

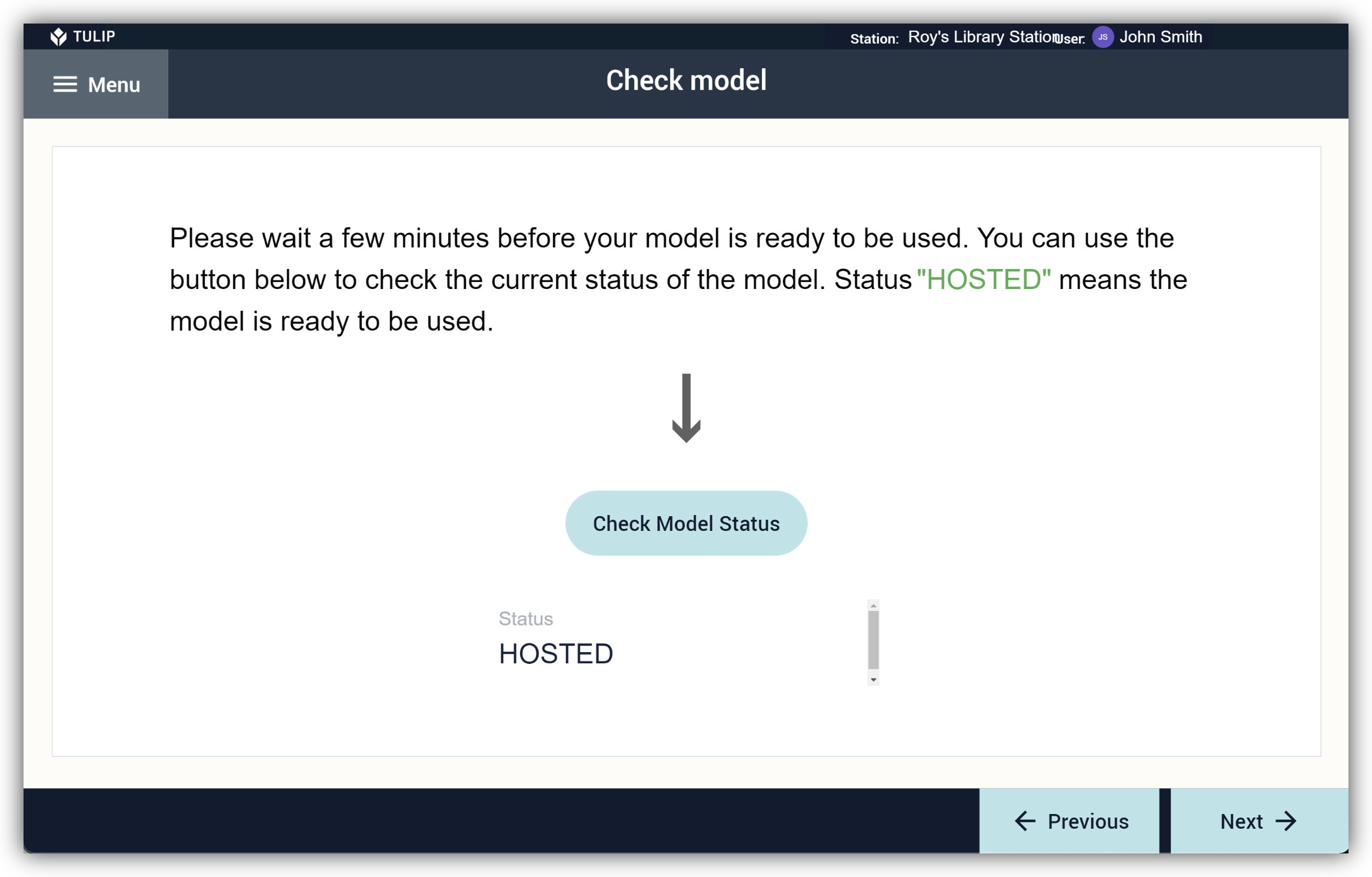

- Check the model's hosting status.

Look for the "HOSTED" status. Until the status is "HOSTED", the model will not accept queries.

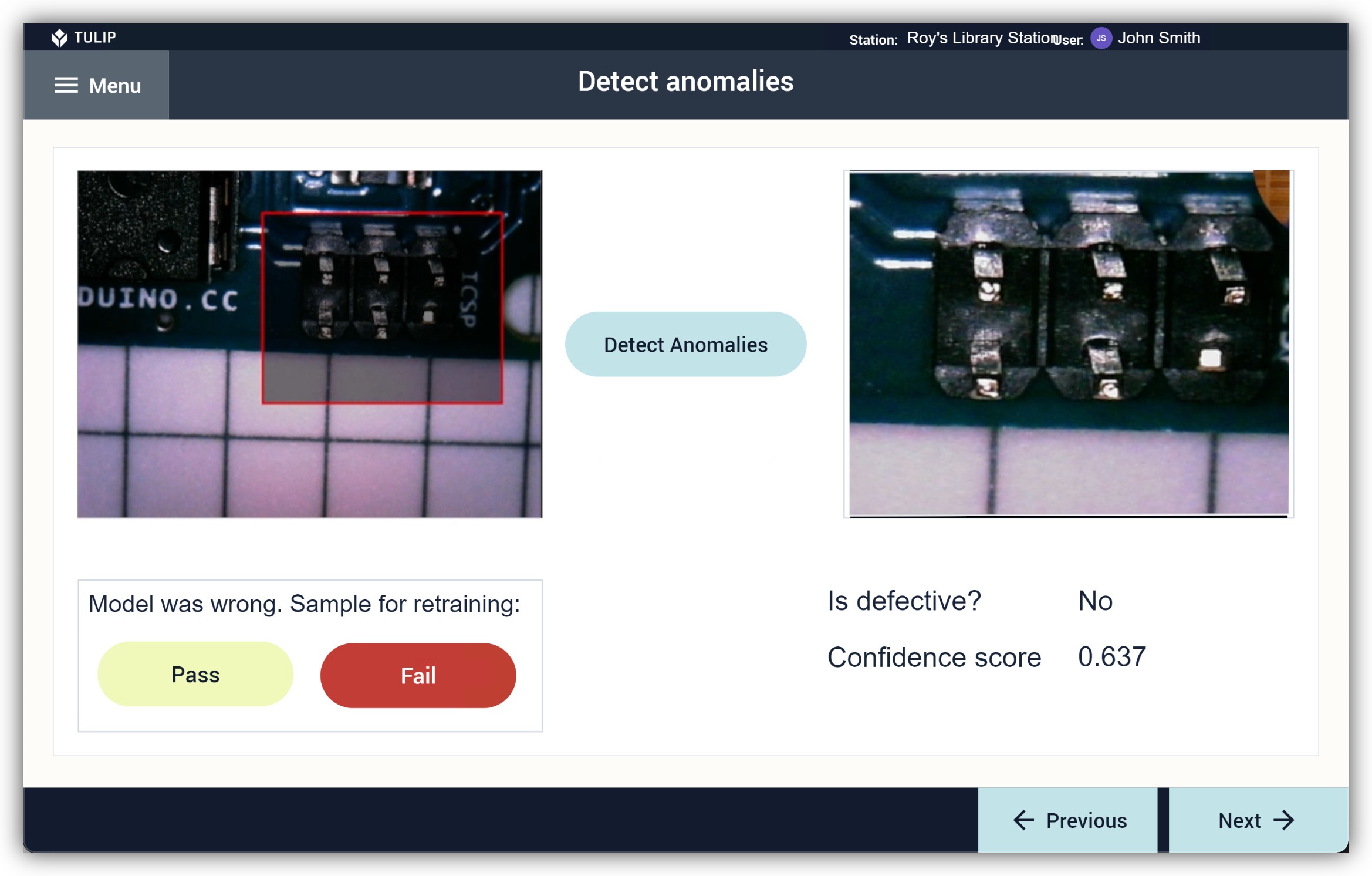

- Running an inference request on the model.

The model should now predict whether each part is defective or normal. The app also has buttons for giving feedback when the model makes a wrong prediction; they add more data to the Tulip Table and to your dataset. Retrain the model periodically with the additional sample images to make it more robust.
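The `DetectAnomalies` response carries an `IsAnomalous` flag and a `Confidence` value for that classification. A minimal sketch of how an app could act on it: label the part, and flag low-confidence predictions as good candidates for the feedback buttons. The 0.7 threshold and the table field names in `feedback_row` are hypothetical.

```python
def interpret(detect_response, review_below=0.7):
    """Turn a DetectAnomalies response into (label, needs_review)."""
    result = detect_response["DetectAnomalyResult"]
    label = "anomaly" if result["IsAnomalous"] else "normal"
    # Low-confidence predictions are worth asking the operator to confirm.
    return label, result["Confidence"] < review_below


def feedback_row(image_url, predicted_label, operator_label):
    """Build a hypothetical Tulip Table row recording the operator's verdict."""
    return {
        "image_url": image_url,
        "label": operator_label,
        "model_correct": predicted_label == operator_label,
    }
```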

Conclusion

We have walked through an easy-to-follow process for getting started with machine learning based automatic visual inspection in Tulip. This process can reduce the manual effort spent on visual inspection. It also gives you a visual dataset of all the defects that arise in your product, which you may use for purposes other than inspection.

Tulip's connectivity features make it easy to apply machine learning. You can use other cloud ML services in much the same way.

Further reading

- Vision Snapshot feature

- Connector functions guide

- Using Azure CustomVision.ai with Tulip for Visual Inspection
