Enable external models for additional copilot options

Purpose

This article walks through how to use custom Amazon Bedrock models and endpoints through API Gateway and a simple Lambda function that invokes the models.

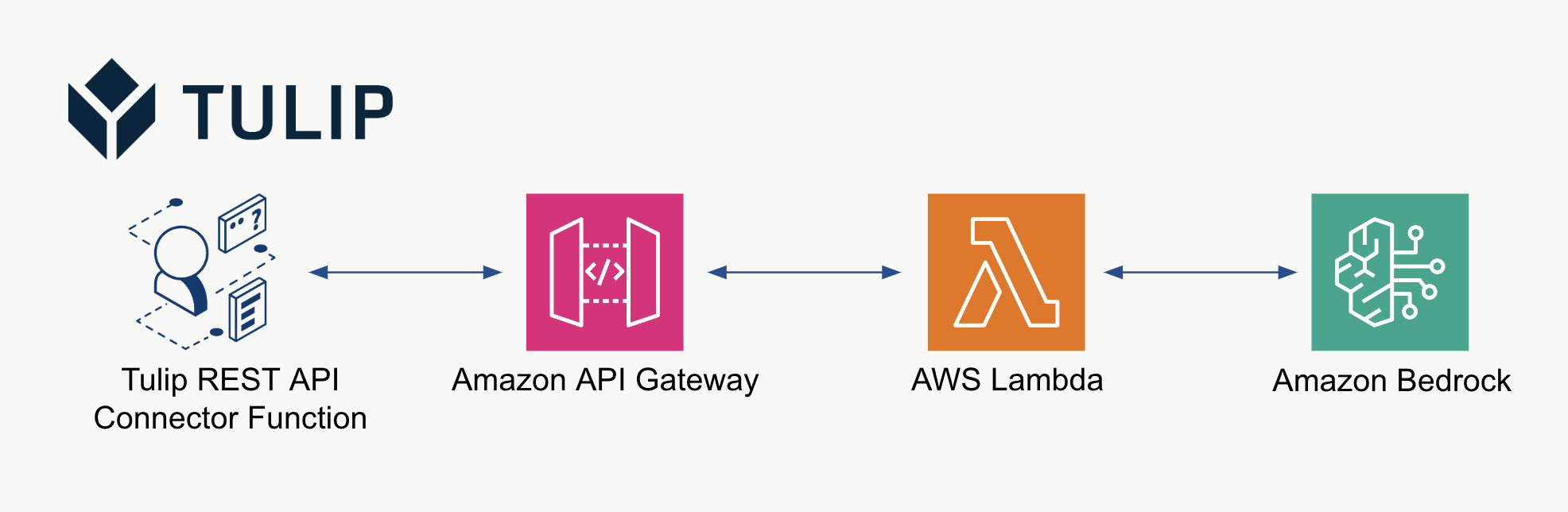

High-level Architecture

The diagram below summarizes a high-level architecture for using custom Bedrock models hosted in the customer's own AWS tenant:

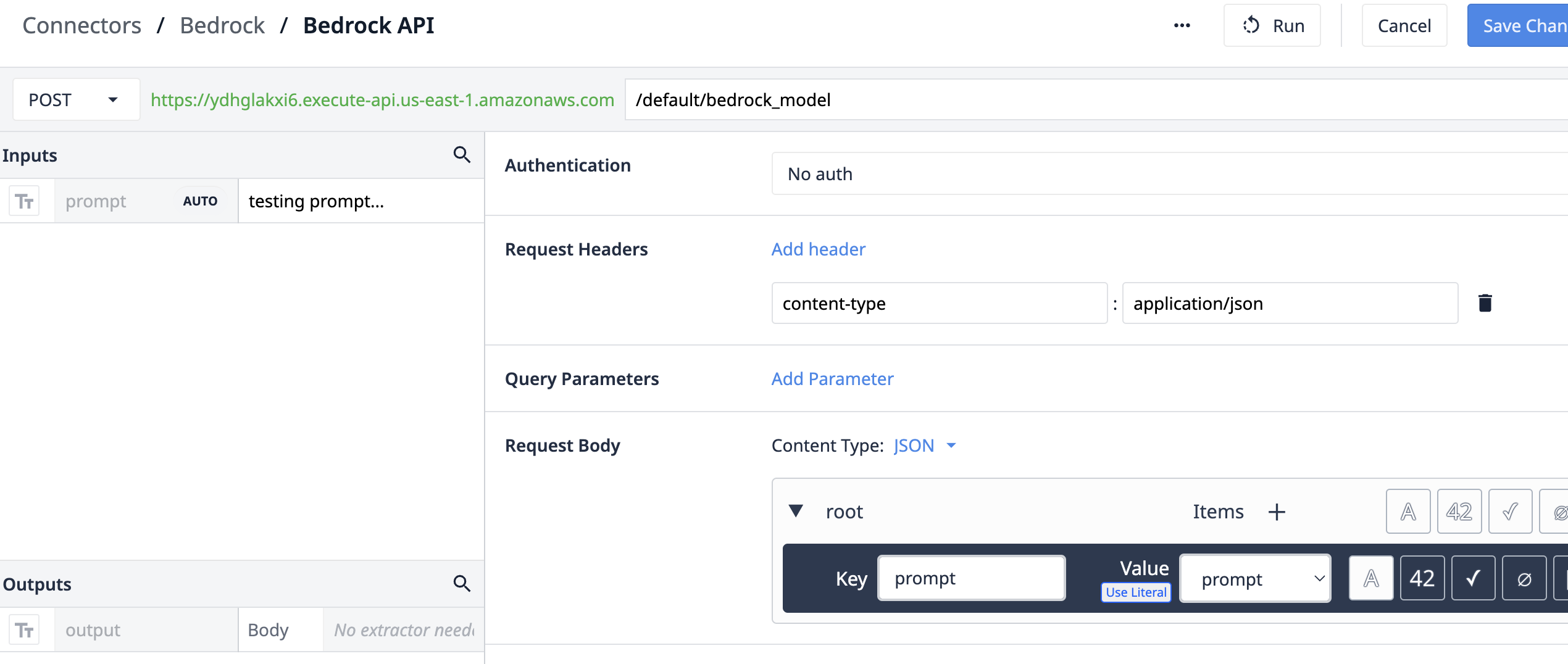

The example architecture and Lambda function (see the Example Lambda Function section) can be called from a Connector function. NOTE: A variety of authentication methods, such as OAuth 2.0, can be used to secure the API Gateway.
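To make the calling side concrete, here is a minimal Python sketch of a client that posts a prompt to the API Gateway endpoint. It is an illustration only, not the Connector configuration itself: the URL, the `build_request` and `invoke` helper names, and the use of a bearer token are all assumptions, and the JSON body shape (`{"prompt": ...}`) simply mirrors what the example Lambda function reads.

```python
import json
import urllib.request

# Hypothetical endpoint: replace with your own API Gateway stage URL.
API_URL = "https://example.execute-api.us-east-1.amazonaws.com/prod/invoke"


def build_request(prompt: str, token: str, url: str = API_URL) -> urllib.request.Request:
    """Build a POST request whose JSON body carries the prompt."""
    payload = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumes the gateway's authorizer accepts an OAuth 2.0 bearer token.
            "Authorization": f"Bearer {token}",
        },
    )


def invoke(prompt: str, token: str, timeout: float = 30.0) -> str:
    """Send the request and return the response body as text."""
    request = build_request(prompt, token)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

Keeping request construction separate from the network call makes it easy to inspect the headers and body before pointing the client at a real endpoint.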

Example Lambda Function

Below is an example Lambda function that invokes a model in Amazon Bedrock. The script can be used as a starting point for building custom inference against a custom Bedrock model.

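    import json
    import logging

    import boto3

    logger = logging.getLogger(__name__)
    logger.setLevel(logging.INFO)

    # Create the client once so warm invocations can reuse it.
    brt = boto3.client(service_name='bedrock-runtime')

    def lambda_handler(event, context):
        # Log the incoming API Gateway event for debugging.
        logger.info(event)

        # The request body arrives as a JSON string that contains the prompt.
        event_dict = json.loads(event['body'])
        prompt = event_dict['prompt']

        # Claude v2 text completions expect the Human/Assistant prompt format.
        body = json.dumps({
            "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
            "max_tokens_to_sample": 300,
            "temperature": 0.1,
            "top_p": 0.9,
        })

        # Base model ID used as a placeholder; substitute the identifier of your custom model.
        modelId = 'anthropic.claude-v2'
        accept = 'application/json'
        contentType = 'application/json'

        response = brt.invoke_model(body=body, modelId=modelId, accept=accept, contentType=contentType)
        response_body = json.loads(response.get('body').read())
        completion_output = response_body.get('completion')

        # API Gateway proxy integrations expect a status code and a string body.
        return {
            "statusCode": 200,
            "body": completion_output
        }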
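The handler above assumes every request carries a JSON body with a `prompt` key and will raise an unhandled exception otherwise. As a hedged sketch of one way to guard against that, the helpers below validate the incoming event and build a 400 response. The names `extract_prompt` and `error_response` are hypothetical and not part of the original script, and the sample event contains only the `body` field the handler reads.

```python
import json


def extract_prompt(event: dict) -> str:
    """Return the prompt from an API Gateway proxy event, or raise ValueError."""
    raw_body = event.get("body")
    if not raw_body:
        raise ValueError("request body is missing")
    try:
        payload = json.loads(raw_body)
    except (TypeError, json.JSONDecodeError) as exc:
        raise ValueError("request body is not valid JSON") from exc
    prompt = payload.get("prompt") if isinstance(payload, dict) else None
    if not isinstance(prompt, str) or not prompt.strip():
        raise ValueError("'prompt' must be a non-empty string")
    return prompt


def error_response(message: str, status_code: int = 400) -> dict:
    """Build a proxy-style response that carries a JSON error message."""
    return {"statusCode": status_code, "body": json.dumps({"error": message})}


# A sample event shaped like the one the handler expects.
sample_event = {"body": json.dumps({"prompt": "Summarize last week's downtime."})}
print(extract_prompt(sample_event))
```

Inside the handler, a call to `extract_prompt(event)` wrapped in `try`/`except ValueError` could return `error_response(str(exc))` instead of letting the invocation fail.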

Scale Considerations

A prime case for leveraging custom models is when the training data you need lives outside of Tulip, in your own AWS tenant. This data can include supply chain data, procurement data, and other sources that extend beyond core manufacturing. Custom models in Amazon Bedrock are a good fit for this kind of data, but it is vital to have a strategy for scale that covers model invocation, model tuning, and more.
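One small piece of an invocation strategy is deciding what happens when a call fails transiently, for example when the service throttles requests under load. The sketch below shows a generic retry with exponential backoff and jitter in plain Python. It is an assumption-laden illustration rather than a recommended configuration: `call_with_backoff`, its parameters, and the choice of which exceptions count as retryable are all hypothetical and would need to be adapted to the errors your model invocation actually raises.

```python
import random
import time


def call_with_backoff(call, is_retryable, max_attempts=5,
                      base_delay=0.5, max_delay=8.0, sleep=time.sleep):
    """Run call(), retrying retryable failures with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except Exception as exc:
            # Give up on the last attempt or on errors that should not be retried.
            if attempt == max_attempts or not is_retryable(exc):
                raise
            # Jitter: wait a random time up to the capped exponential delay.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
```

In the Lambda function, `call` could be a small closure around the `invoke_model` request, with `is_retryable` checking for the throttling error your client reports.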

Next Steps

For further reading, see the AWS Well-Architected Framework. It is a great resource for understanding optimal methods for invoking models and planning an inference strategy at scale.