To download the app, visit: Library

Use LandingAI's LandingLens to perform rapid object detection.

Purpose

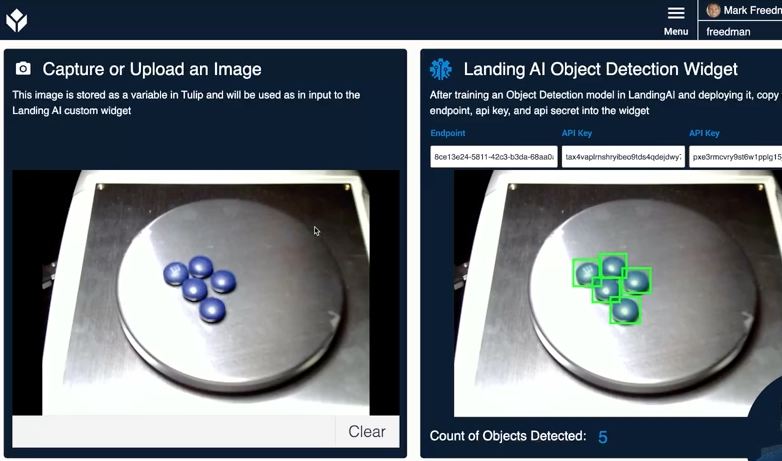

This widget gives you access to the vision and machine learning models you have created in LandingLens. With it, you can take a picture in Tulip and send it to your LandingLens endpoint, which returns a count of the objects found by the AI model.

Setup

To use this widget, you need an account with https://landing.ai/. For full documentation on how the platform works, refer to LandingAI's support documentation.

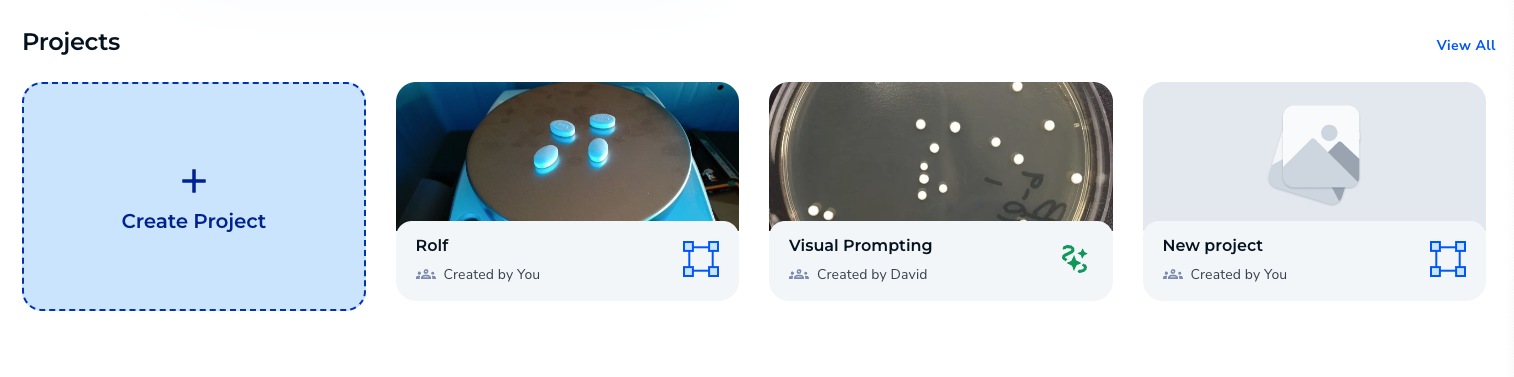

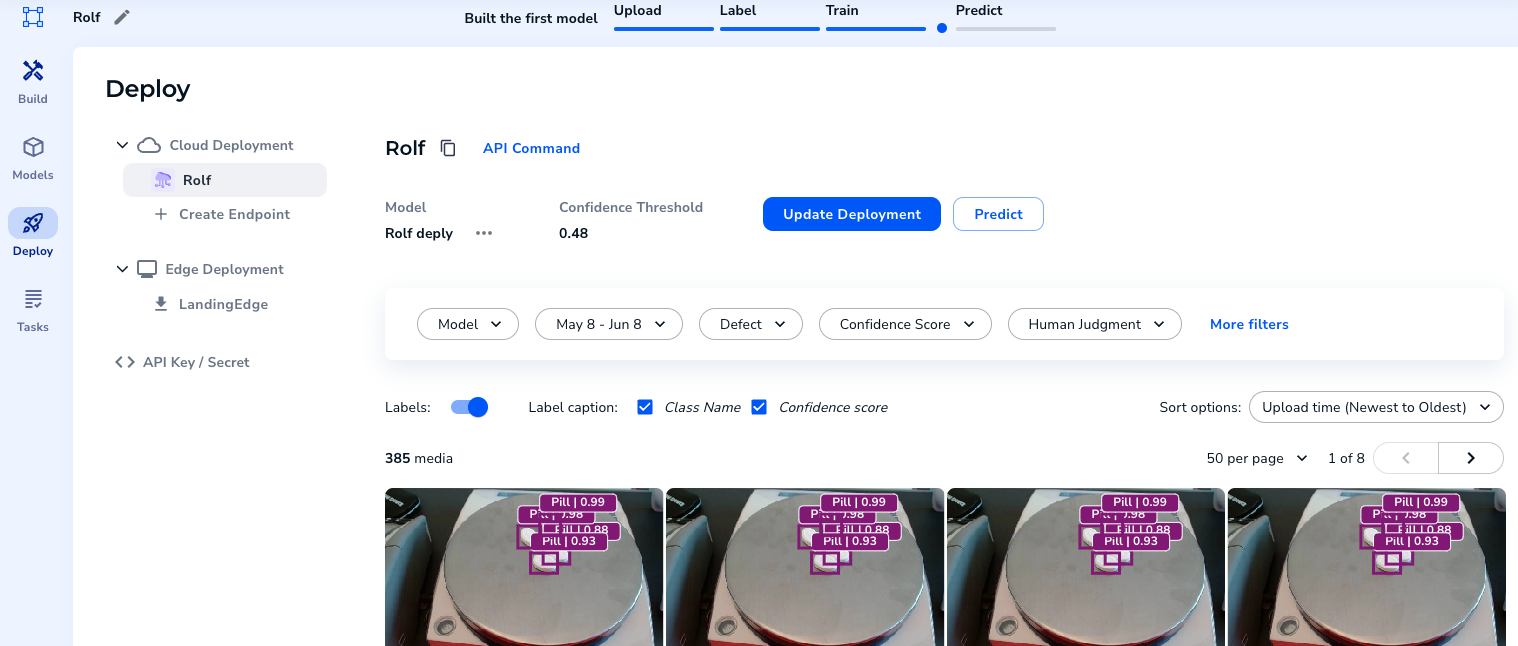

- Create a new project.

-

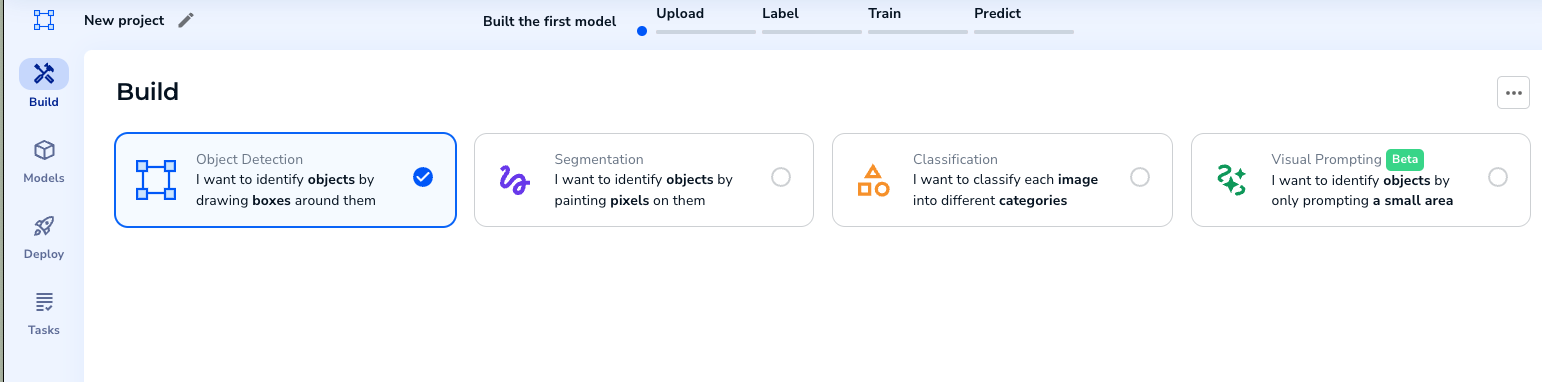

Select an object detection project.

-

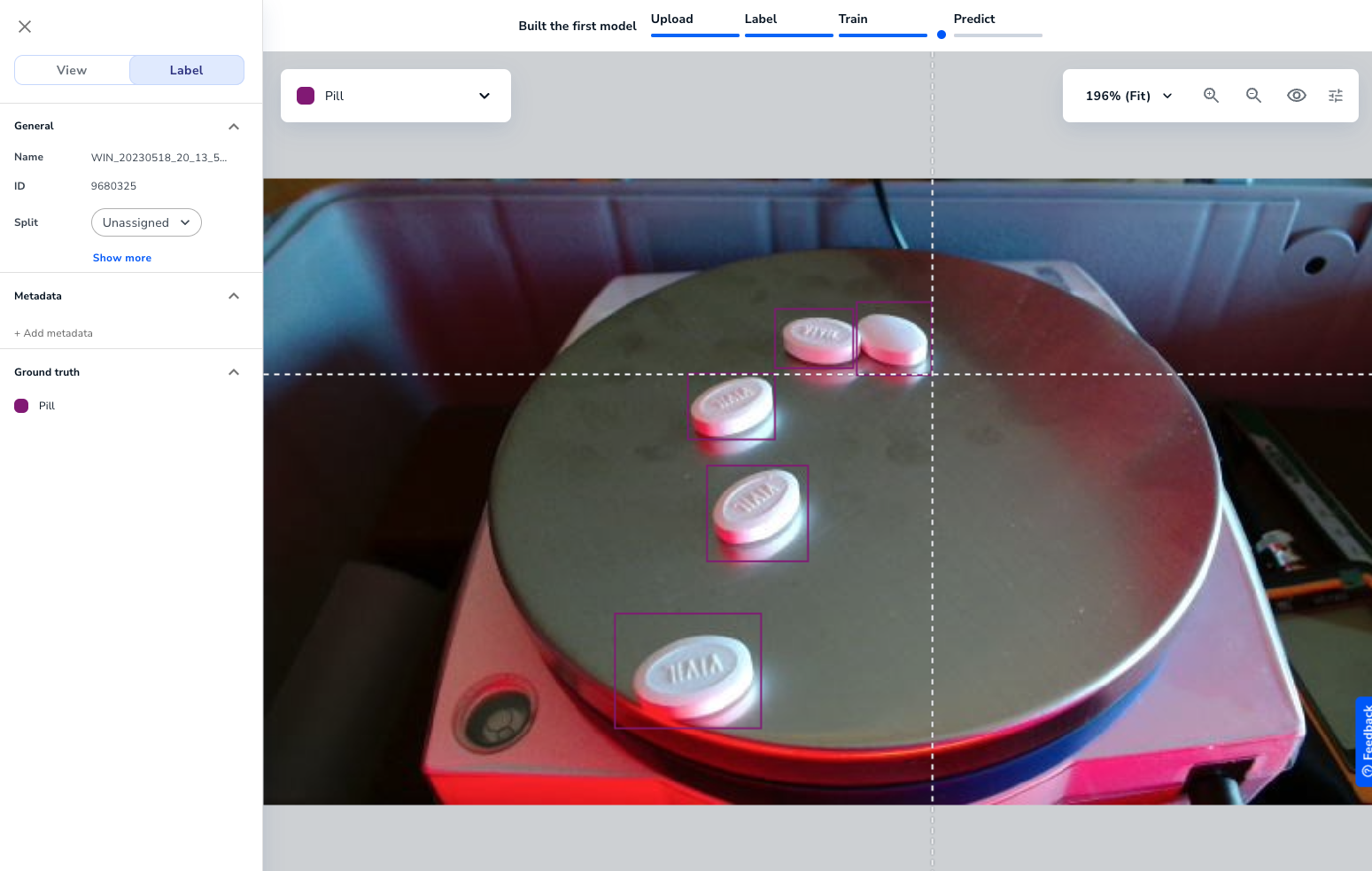

Upload your training images, you only need 10. Then label the images identifying the objects.

Once enough images are labeled, you will be able to train the model.

-

After training the model you can deploy it and create an endpoint.

-

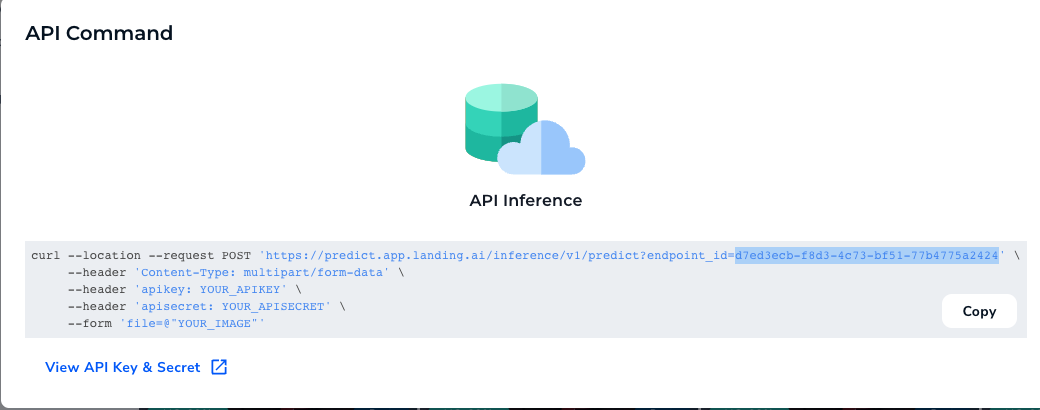

If you select "API Command" you will be able to view the endpoint

Copy the API key and secret.
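To make the role of the endpoint, API key, and secret concrete, here is a minimal Python sketch of how an image could be packaged into a request for a deployed endpoint. It only builds the request and does not send it. The URL, the `apikey` and `apisecret` header names, and the `file` form field are assumptions for illustration; the "API Command" view in your project shows the values your deployment actually expects. `build_predict_request` is a hypothetical helper, not part of Tulip or LandingLens.

```python
import uuid
import urllib.request


def build_predict_request(endpoint_url, api_key, api_secret, image_bytes,
                          filename="capture.jpg"):
    """Build (but do not send) a multipart POST carrying one image."""
    boundary = uuid.uuid4().hex
    body = b"".join([
        f"--{boundary}\r\n".encode(),
        # The form field name "file" is an assumption.
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'.encode(),
        b"Content-Type: application/octet-stream\r\n\r\n",
        image_bytes,
        f"\r\n--{boundary}--\r\n".encode(),
    ])
    request = urllib.request.Request(endpoint_url, data=body, method="POST")
    # Header names are assumptions; confirm them in the "API Command" view.
    request.add_header("apikey", api_key)
    request.add_header("apisecret", api_secret)
    request.add_header("Content-Type", f"multipart/form-data; boundary={boundary}")
    return request


# Placeholder values; substitute the endpoint, key, and secret from your project.
request = build_predict_request(
    "https://example.invalid/predict?endpoint_id=YOUR_ENDPOINT_ID",
    "YOUR_API_KEY",
    "YOUR_API_SECRET",
    b"\xff\xd8\xff",  # stand-in bytes, not a real image
)
# To actually send it: urllib.request.urlopen(request).read()
```

Inside Tulip, the widget performs this step for you; the sketch is only useful for checking an endpoint and its credentials outside the app.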

How it works

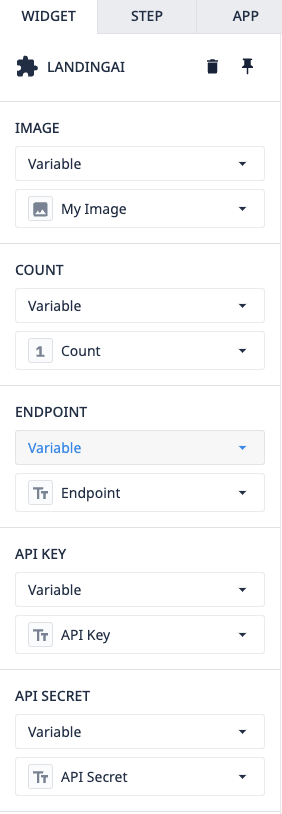

The application and the widget are easy to use. The widget has a few props that come prepopulated.

- Image: The image that you send to LandingLens from Tulip.

- Count: A variable written by the widget that holds the number of objects detected by the model.

- Endpoint: The endpoint copied from your LandingLens project.

- API Key & Secret: The credentials copied from your deployed project.

Once all of these props are filled in, the widget sends a request to LandingAI's API and returns an image with the detected objects highlighted.
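The flow described here (check that every prop is set, send the request, then write the object count) can be sketched roughly as follows. This is an illustration in Python, not the widget's actual source. The prop names, the response shape (`{"predictions": [{"label", "score"}]}`), and the optional `min_score` filter are all assumptions; inspect a real response from your endpoint before relying on any field name.

```python
# Hypothetical prop names mirroring Image, Endpoint, and API Key & Secret.
REQUIRED_PROPS = ("image", "endpoint", "api_key", "api_secret")


def props_ready(props):
    """Return True only when every required prop has a non-empty value."""
    return all(props.get(name) for name in REQUIRED_PROPS)


def count_detections(response, min_score=0.0):
    """Count predictions whose score is at least min_score.

    Assumes a hypothetical response shape:
    {"predictions": [{"label": str, "score": float}, ...]}
    """
    predictions = response.get("predictions", [])
    return sum(1 for p in predictions if p.get("score", 0.0) >= min_score)


props = {
    "image": b"\xff\xd8\xff",  # stand-in bytes, not a real image
    "endpoint": "https://example.invalid/predict",
    "api_key": "YOUR_API_KEY",
    "api_secret": "YOUR_API_SECRET",
}

# Made-up response used only to exercise the counting logic.
sample_response = {"predictions": [
    {"label": "screw", "score": 0.92},
    {"label": "screw", "score": 0.81},
    {"label": "screw", "score": 0.30},
]}

# Only compute a count when all props are filled in.
count = count_detections(sample_response) if props_ready(props) else None
print(count)
```

Leaving any prop empty makes `props_ready` return `False`, which corresponds to the widget not sending a request.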