Streamline sending data from Tulip to AWS for broader analytics and integration opportunities

Purpose

This guide walks through, step by step, how to send any kind of Tulip data to AWS using API Gateway, a Lambda function, and a Tulip connector function.

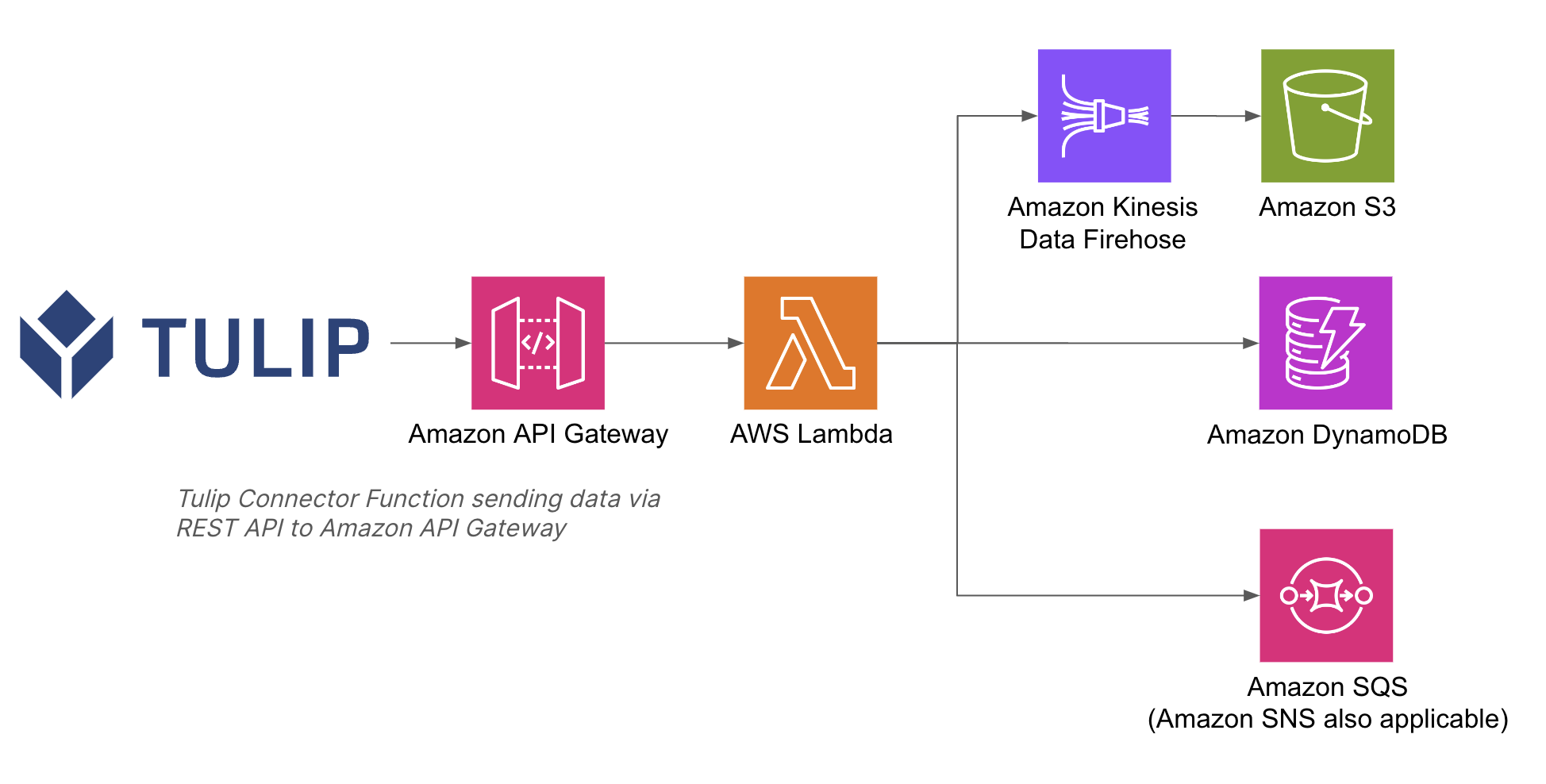

An example architecture is listed below:

This approach matters because, with API Gateway and Lambda functions, you don't need to authenticate to databases with a username and password on the Tulip side; you can rely on IAM authentication inside AWS. It also makes it easier to use other AWS services such as Redshift, DynamoDB, and more.

Setup

This example integration pushes data to AWS from Tulip via connector functions. Alternatively, you can fetch Tulip Tables data via the Tables API. The connector function method lets app builders send any data in an app to AWS.
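To make the push model concrete, the request a connector function issues is roughly equivalent to an HTTP POST with a JSON body. The sketch below builds such a request in Python, which can be handy for exercising the endpoint outside of Tulip. The endpoint URL and the payload fields are hypothetical placeholders, not values from this guide.

```python
import json
import urllib.request

# Hypothetical endpoint; replace with your API Gateway invoke URL.
ENDPOINT = "https://example.execute-api.us-east-1.amazonaws.com/tulip-data"


def build_request(payload: dict, url: str = ENDPOINT) -> urllib.request.Request:
    """Build the POST request a connector function would send (not sent here)."""
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical app data; any JSON-serializable values work.
request = build_request({"station": "Assembly 1", "defect_count": 2})
# urllib.request.urlopen(request) would send it to the endpoint.
```

The request is only constructed here, not sent, so the sketch runs without network access or AWS credentials.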

High-level requirements:

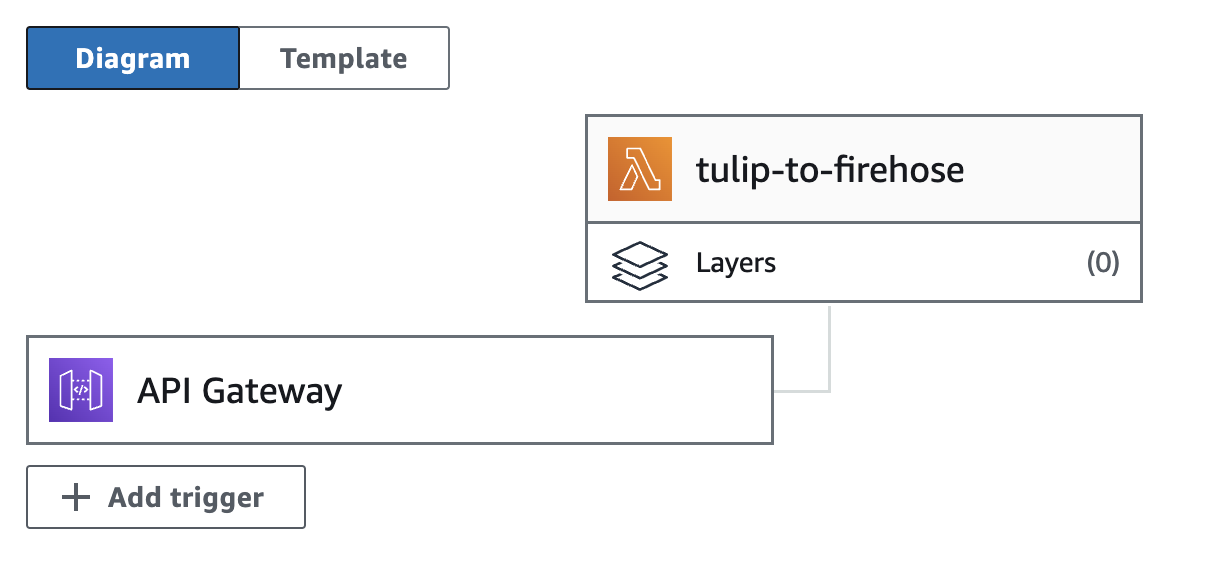

- Create an AWS Lambda function with an API Gateway as a trigger

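- Read the Tulip connector function payload in the Lambda function, along the lines of the example script below

```python
import base64
import json


def lambda_handler(event, context):
    # API Gateway proxy integrations deliver the request body as a string
    body = event['body']
    # The body may be base64-encoded; API Gateway flags this in the event
    if event.get('isBase64Encoded'):
        body = base64.b64decode(body)
    data = json.loads(body)
    # use the data variable to write to S3, Firehose,
    # databases, and more
    return {'statusCode': 200, 'body': json.dumps({'received': True})}
```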

- The API Gateway can be either an HTTP API or a REST API, depending on your security and complexity constraints. For example, the REST API option supports API key authentication, while the HTTP API does not and instead offers JWT (JSON Web Token) authorizers, along with IAM and Lambda authorizers. Make sure the IAM role executing the Lambda function has the appropriate permissions as well

- Then add whatever integrations are required. From Lambda functions, you can write the data to a database, S3, or a notification service
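The steps above can be sketched end to end as a handler that parses the request and writes each record to S3. This is a minimal sketch under stated assumptions, not a definitive implementation: the `tulip/` key layout and the helper names `parse_body`, `store_record`, and `handle` are hypothetical, and the S3 client is passed in so the logic can be tried without AWS credentials.

```python
import base64
import json
import uuid


def parse_body(event: dict) -> dict:
    """Decode the JSON body of an API Gateway proxy event."""
    body = event["body"]
    if event.get("isBase64Encoded"):
        body = base64.b64decode(body)
    return json.loads(body)


def store_record(s3_client, bucket: str, data: dict) -> str:
    """Write one record to S3 as a JSON object and return its key."""
    key = f"tulip/{uuid.uuid4()}.json"  # hypothetical key layout
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=json.dumps(data).encode("utf-8"),
        ContentType="application/json",
    )
    return key


def handle(event: dict, s3_client, bucket: str) -> dict:
    """Parse the request, store it, and build a proxy-style response."""
    try:
        data = parse_body(event)
    except (KeyError, TypeError, ValueError):
        # Missing, non-string, or malformed body
        return {"statusCode": 400, "body": json.dumps({"error": "invalid JSON body"})}
    key = store_record(s3_client, bucket, data)
    return {"statusCode": 200, "body": json.dumps({"key": key})}
```

Inside Lambda, `lambda_handler` could call `handle(event, boto3.client("s3"), bucket_name)`, where `bucket_name` comes from configuration of your choosing. The function's IAM role would then need `s3:PutObject` on that bucket.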

Use Cases and Next Steps

Once you have finalized the integration with Lambda, you can analyze the data with a SageMaker notebook, QuickSight, or a variety of other tools.

1. Defect prediction

- Identify production defects before they happen and increase right-first-time rates

- Identify core production drivers of quality in order to implement improvements

2. Cost of quality optimization

- Identify opportunities to optimize product design without impacting customer satisfaction

3. Production energy optimization

- Identify production levers to optimize energy consumption

4. Delivery and planning prediction and optimization

- Optimize the production schedule based on customer demand and the real-time order schedule

5. Global Machine / Line Benchmarking

- Benchmark similar machines or equipment with normalization

6. Global / regional digital performance management

- Consolidate data to create real-time dashboards
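As a small illustration of the kind of metric these use cases build on, the sketch below computes a right-first-time rate per station from records shaped like the payloads sent from Tulip. The field names `station` and `passed_first_time` are hypothetical; substitute whatever your apps actually send.

```python
from collections import defaultdict


def right_first_time_rate(records):
    """Return the share of units per station that passed without rework."""
    totals = defaultdict(int)
    passed = defaultdict(int)
    for record in records:
        station = record["station"]
        totals[station] += 1
        if record["passed_first_time"]:
            passed[station] += 1
    return {station: passed[station] / totals[station] for station in totals}


# Hypothetical records, shaped like payloads landed by the Lambda function.
records = [
    {"station": "A", "passed_first_time": True},
    {"station": "A", "passed_first_time": False},
    {"station": "B", "passed_first_time": True},
]
rates = right_first_time_rate(records)
```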